Latest News

August 9, 2013

By Dr. Wolfgang Gentzsch, Dr. Dennis Nagy and Burak Yenier

The UberCloud CAE Experiment is making high-performance computing (HPC) available as a service on demand for engineers doing simulation. Participants in the experiment are exploring the end-to-end process of using remote computing resources in HPC centers and in the cloud for their CAE; in the process, they’re learning how to resolve many of the common roadblocks.

Why Participate in the CAE Experiment?

The UberCloud CAE Experiment has been designed to discover and reduce many of the barriers mentioned in the main article. By participating in this experiment and moving engineering applications to remote computing resources, engineers can expect several benefits.

There are more than 300,000 small and medium-size manufacturing enterprises (SMEs) in the U.S. alone, and many of them rely on workstations for their daily CAE design and development work. According to the U.S. Council on Competitiveness, more than 50% of them need more computing power from time to time. Buying an HPC cluster is not always an option, and renting computing power from HPC centers or an HPC cloud provider still comes with several roadblocks, including:

- the complexity of HPC itself;

- intellectual property, sensitive data and privacy concerns;

- massive data transfers;

- inflexible software licenses;

- performance bottlenecks from virtualized resources;

- user-specific system requirements;

- missing standards; and

- the lack of interoperability among different clouds.

On the other hand, if these roadblocks can be removed, the benefits of using remote computing resources become extremely attractive. For example:

- no lengthy procurement and acquisition;

- shifting budget from capex to opex;

- gaining business flexibility (getting additional resources on demand); and

- scaling remote resource usage automatically up and down according to actual needs.

The Purpose of the Experiment

To better understand and overcome these roadblocks, the open, voluntary and free UberCloud CAE Experiment was started in July 2012. The experiment actively promotes the wider adoption of digital manufacturing technology among the so-called “missing middle” of SMEs. It is a grassroots, crowd-sourced effort to foster collaboration among engineers, HPC experts and service providers and to address challenges at scale. Its aim is to explore the end-to-end process that digital manufacturing engineers use to access remote CAE computing resources in HPC centers and in the cloud.

In the intervening year, the UberCloud HPC Experiment attracted 500 participating organizations and individuals from 48 countries. At press time, more than 80 teams had been involved. Each team consists of an industry end-user and a software provider (ISV); the organizers match them with a well-suited volunteer resource provider and an HPC expert who serves as the team leader. Together, the team members work on the end-user’s application: defining the requirements, implementing the application on the remote HPC system, running and monitoring the job, getting the results back to the end-user, and reporting results and key findings about the HPC process in a case study.

Detailed results are published after each three-month round and are available upon registration. Round 3 concluded at the end of June 2013, and at press time, teams were ramping up Round 4.

Engineering Teams of Rounds 1 and 2

As a glimpse into the wealth of practical results so far, here are four of the successful teams that participated in Round 1 and Round 2, demonstrating the wide spectrum of CAE applications:

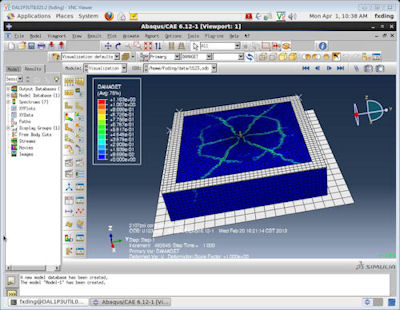

Team 1: Heavy-duty Abaqus Structural Analysis Using HPC in the Cloud

The applications in this team range from anchorage tensile capacity and steel and wood connector load capacity to special moment frame cyclic pushover analysis. The HPC cluster at Simpson Strong-Tie is modest, consisting of 32 cores, so the ability to burst into the cloud is critical when emergencies arise. Also challenging are handling sudden large data transfers and performing visualization to ensure that the design simulation is proceeding along correct lines. The team consisted of engineer Frank Ding from Simpson Strong-Tie, software provider Matt Dunbar from SIMULIA, resource provider Steve Hebert from Nimbix, and team expert Sharan Kalwani.

Fig. 1: Structural analysis model using HPC in the cloud.

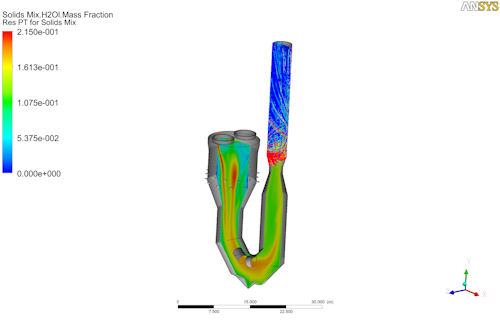

Team 8: Flash Dryer Simulation with Hot Gas Used to Evaporate Water from a Solid

The end-user simulates a flash dryer with computational fluid dynamics (CFD) multiphase flow models, using CFD tools from its extensive CAE portfolio. On the in-house server, the flow model takes about five days for a realistic particle-loading scenario. ANSYS CFX 14 is used as the solver. Simulations for this problem use 1.4 million cells, five species and a time step of 1 millisecond for a total simulated time of 2 seconds. A cloud solution allowed the end-user to run the models faster and so increase the turnover of sensitivity analyses. It also allowed the end-user to focus on engineering aspects instead of spending valuable time on IT and infrastructure problems. The team consisted of Dr. Sam Zakrzewski from FLSmidth; the software provider Dr. Wim Slagter from ANSYS; the resource provider Marc Levrier from Serviware, a Bull Group company; and the HPC expert Ingo Seipp from Science + Computing.

Fig. 2: Flash dryer model viewed with ANSYS CFD-Post.
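To give a feel for the size of the Team 8 problem, the figures quoted above can be turned into a rough workload estimate. The sketch below uses only numbers from the article (1.4 million cells, a 1 millisecond time step, 2 seconds of simulated time, about five days in-house); the variable and function names are illustrative, and the per-step cost is a crude average that treats "about five days" as exactly five and ignores the five species and the solver's inner iterations.

```python
# Back-of-the-envelope workload estimate from the figures quoted in the article.
CELLS = 1_400_000        # mesh size
TIME_STEP_S = 0.001      # 1 millisecond time step
SIMULATED_TIME_S = 2.0   # total physical time simulated
IN_HOUSE_DAYS = 5        # approximate in-house wall-clock time (assumed exact here)


def time_step_count(simulated_time_s, time_step_s):
    """Number of time steps needed to cover the simulated time."""
    return round(simulated_time_s / time_step_s)


steps = time_step_count(SIMULATED_TIME_S, TIME_STEP_S)
cell_updates = CELLS * steps                           # cells visited over the whole run
seconds_per_step = IN_HOUSE_DAYS * 24 * 3600 / steps   # average wall-clock cost per step

print(f"time steps:            {steps}")
print(f"cell updates:          {cell_updates:,}")
print(f"avg. seconds per step: {seconds_per_step:.0f}")
```

On these assumptions the run covers 2,000 time steps, and the in-house server spends a few minutes of wall-clock time on each one, which is why a faster turnaround matters for sensitivity analyses that repeat the run many times.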

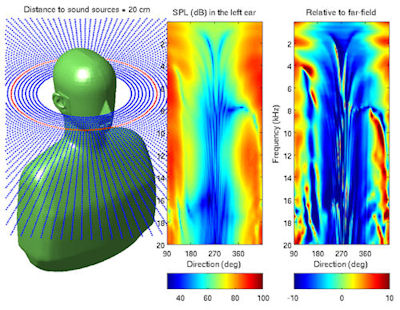

Team 40: Simulation of Spatial Hearing

A sound emitted by an audio device is perceived by the user of the device. The human perception of sound is, however, a personal experience. For example, spatial hearing (the capability to distinguish the direction of sound) depends on the individual shape of the torso, head and pinna, an effect described by the so-called head-related transfer function, or HRTF. To produce directional sounds via headphones, one needs to use HRTF filters that “model” sound propagation in the vicinity of the ear. These filters can be generated using computer simulations, but to date, the computational challenges of simulating the HRTFs have been enormous. This project investigated the fast generation of HRTFs using simulations in the cloud. The simulation method relied on an extremely fast boundary element solver. The team consisted of an engineering end-user (anonymous) from a manufacturer of consumer products, the software provider and HPC experts Antti Vanne, Kimmo Tuppurainen and Dr. Tomi Huttunen from Kuava Ltd, and the HPC experts Drs. Ville Pulkki and Marko Hiipakka from Aalto University in Finland.

Fig. 3: Simulation model (an acoustic dummy): Dots indicate all locations of monopole sound sources used in the simulations. The red dots are sound sources used in this image. The figure in the middle shows the sound pressure level (SPL) in the left ear as a function of the sound direction and the frequency. On the right, the SPL relative to sound sources in the far-field is shown.
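For readers unfamiliar with how HRTF filters are applied, the following minimal sketch shows the general idea: a mono signal is convolved with a pair of impulse responses, one per ear (the time-domain counterpart of an HRTF is usually called a head-related impulse response, or HRIR). This is not the team's boundary element solver, and the filter values are made-up toy numbers rather than measured or simulated HRTFs; the function names are hypothetical.

```python
def convolve(signal, impulse_response):
    """Direct-form discrete convolution, returning the full-length result."""
    out = [0.0] * (len(signal) + len(impulse_response) - 1)
    for i, s in enumerate(signal):
        for j, h in enumerate(impulse_response):
            out[i + j] += s * h
    return out


def render_binaural(mono, hrir_left, hrir_right):
    """Filter one mono signal with a left/right impulse-response pair."""
    return convolve(mono, hrir_left), convolve(mono, hrir_right)


# Toy impulse responses for a source on the listener's right (made-up values):
# the right ear receives the sound immediately, the left ear two samples
# later and at half the amplitude.
HRIR_RIGHT = [1.0, 0.0, 0.0]
HRIR_LEFT = [0.0, 0.0, 0.5]

click = [1.0, 0.0, 0.0, 0.0]   # a single-sample click as the mono input
left, right = render_binaural(click, HRIR_LEFT, HRIR_RIGHT)

print("left ear: ", left)
print("right ear:", right)
```

Even in this toy case, the left channel arrives later and quieter than the right, which are the kinds of cues the ear uses to judge direction. Real HRTF filters are much longer and differ from person to person, which is why generating them by simulation is computationally demanding.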

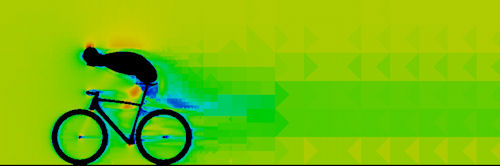

Team 58: Simulating Wind Tunnel Flow Around Bicycle and Rider

The CAPRI to OpenFOAM Connector and the Sabalcore HPC Computing Cloud infrastructure were used to analyze the airflow around bicycle design iterations from Trek Bicycle. The goal was to establish synergy among iterative CAD design, CFD analysis and the HPC cloud. Automating iterative design changes in CAD models coupled with CFD significantly enhances the productivity of engineers and enables them to make better decisions. Using a cloud-based solution to meet the HPC requirements of computationally intensive applications decreases the turnaround time in iterative design scenarios and reduces the overall cost of the design. The team consisted of end-user Mio Suzuki from Trek Bicycle Corp., software provider and HPC expert Mihai Pruna from CADNexus, and resource provider Dr. Kevin Van Workum from Sabalcore Computing.

Fig. 4: Velocity color plot, generated with the CADNexus Visualizer Lightweight Postprocessor.

DE readers are encouraged to join Round 4 of the UberCloud CAE Experiment by registering at CAEExperiment.com.

The authors’ biographies may be found on LinkedIn.com, and they may be reached at [email protected]. Send e-mail about this article to [email protected].


