Moving CAE Applications to the Cloud is Getting Easier

July 23, 2018

By Wolfgang Gentzsch and Burak Yenier

When we started in 2012 with our UberCloud Experiments taking engineers’ CAE applications to the cloud, such an experiment took three months on average, and the failure rate was about 50%. Although the cloud ‘on-boarding’ process then was already well understood, the major and time-consuming hurdles were still unresolved, such as on-demand software licenses, multi-gigabyte data transfer, security concerns, lack of cloud expertise and losing control over assets in the cloud.

In 2015, based on the experience gained from more than 100 previous cloud experiments, we reached an important milestone when we introduced novel UberCloud software container technology based on Linux Docker containers and applied it to engineering CAE workflows. Cloud experiment teams’ use of these high-performance computing (HPC) containers shortened experiment times dramatically, to just a few days. Today, the major cloud hurdles are well understood and have mostly been resolved, and accessing and using cloud resources has become as easy as using in-house desktop systems. Thanks to this progress, the average CAE cloud experiment now takes about three days, and the failure rate is 0%.

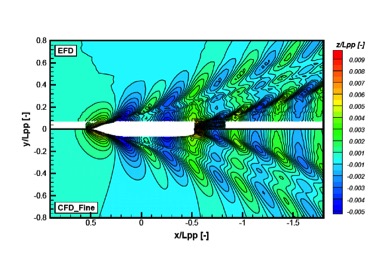

Comparison of global wave elevation between calculation and experiment. The Kelvin wake can be resolved accurately even one ship length behind the ship.

Our 5th annual compendium describes 13 selected technical computing applications in the HPC cloud. Like its predecessors, this year’s edition draws from a select group of projects undertaken as part of the UberCloud Experiment and sponsored again by Hewlett Packard Enterprise, Intel and Digital Engineering. The Compendium is a way of sharing the cloud experiment results with the broader engineering community; it has just been released and is now available for download. In the following we have briefly summarized five CAE cloud experiments to demonstrate the power of cloud for engineering and scientific applications. All cloud experiments have been supported by UberCloud Professional Services experts.

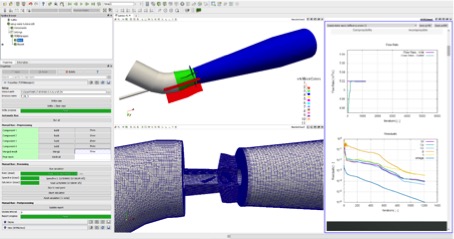

Kaplan Turbine Flow Simulation Using Turbomachinery CFD

This cloud experiment deals with an application in the hydropower and renewable energy sector. There are still many opportunities to exploit usable hydro potential: existing hydropower plants with old, obsolete turbines, new hydropower plants at existing weirs, and new hydropower plants at new locations. The flow inside Kaplan water turbines is simulated using the Turbomachinery CFD module from CFD Support for OpenFOAM. The flow simulation and its analysis are important for verifying turbine energy parameters, optimizing the turbine shape and evaluating changes to the turbine geometry.

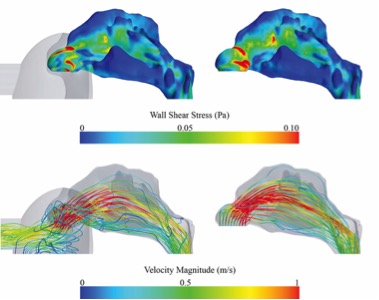

Wall Shear Stress distributions at the nasal cavity’s wall (upper panel) and velocity information visualized using streamlines (lower panel). Those distributions are shown for simulations using a simplified mask (left) as well as for simulations, where the nasal cavity was truncated at the nostrils.

The end user of this project team was Martin Kantor from GROFFENG (GRoup OF Freelance ENGineers), and Luboš Pirkl from CFD Support provided Turbomachinery CFD. Simulations ran on the Advania Data Centers HPCFLOW Cloud in Iceland (represented by Aegir Magnusson and Jon Tor Kristinsson), supported by UberCloud.

Table 1: Time duration of simulation

| Computer | Time for 1,000 iterations [minutes] |
| --- | --- |
| Local system (1 core) | 90 |
| Cloud (2 cores) | 80 |
| Cloud (4 cores) | 34 |
| Cloud (20 cores) | 20 |
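To put the timings in Table 1 into perspective, the following minimal Python sketch derives the speedup relative to the local single-core run and a rough per-core efficiency. The timings are copied from Table 1; the function name `speedup_table` and the efficiency measure are our own illustrative choices, not part of the original experiment. Because the local system and the cloud nodes use different hardware, the efficiency figure is only a rough indicator, not a strict parallel-scaling measurement.

```python
# Timings copied from Table 1: (label, cores, minutes per 1,000 iterations).
timings = [
    ("Local system", 1, 90),
    ("Cloud", 2, 80),
    ("Cloud", 4, 34),
    ("Cloud", 20, 20),
]


def speedup_table(rows):
    """Return (label, cores, speedup, efficiency) relative to the first row.

    speedup    = baseline minutes / minutes of this run
    efficiency = speedup / (cores of this run / baseline cores)
    Both are illustrative measures; the hardware differs between rows.
    """
    _, base_cores, base_minutes = rows[0]
    result = []
    for label, cores, minutes in rows:
        speedup = base_minutes / minutes
        efficiency = speedup / (cores / base_cores)
        result.append((label, cores, speedup, efficiency))
    return result


results = speedup_table(timings)
for label, cores, speedup, efficiency in results:
    print(f"{label} ({cores} cores): speedup {speedup:.2f}x, "
          f"efficiency {efficiency:.1%}")
```

With these numbers, the 20-core cloud run is 4.5 times faster than the local single-core run.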

Maneuverability of a Container Ship

The aim of UberCloud Experiment #201 was to verify the feasibility of overset grids for a direct zigzag test using an “appended” KRISO Container Ship (KCS) model, run in the NUMECA UberCloud container in the cloud. The commercial CFD software FINE/Marine, packaged by NUMECA International S.A., was employed during this experiment. All simulations were run on the newest software version, 6.2, which features improved robustness. A further goal was to accelerate the simulations using the HPC cloud resources provided by UberCloud Inc. and NUMECA.

Project team #201 consisted of end user Xin Gao from the Dynamics of Maritime Systems Department at the Technical University of Berlin, Germany. The software and resource provider was Aji Purwanto from NUMECA International in Belgium, and the technology expert was Sven Albert from NUMECA Engineering in Germany.

CFD Simulation of Airflow

In this cloud experiment, the airflow within the nasal cavity of a patient without impaired nasal breathing was simulated using Siemens’ STAR-CCM+ software container on a dedicated compute cluster in the Microsoft Azure Cloud. Because the mesh resolutions recommended in the literature vary broadly (1 to 8 million cells), a mesh independence study was performed. Additionally, two different inflow models were tested. However, the main focus of this study was the usability of cloud-based HPC for numerical assessment of nasal breathing.

The project team consisted of the expert team from the Charité University Hospital, Berlin, Germany: Jan Bruening, Dr. Leonid Goubergrits and Dr. Thomas Hildebrandt; the software provider Siemens (formerly CD-adapco) providing STAR-CCM+; and the cloud provider Microsoft Azure with its UberCloud STAR-CCM+ software container.

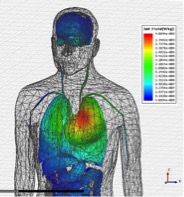

Implantable Planar Antenna Simulation

For this case study, design modification and tuning of a Planar Inverted F Antenna (PIFA) were performed in the cloud, with the implantable antenna placed inside the skin tissue of an ANSYS human body model. The resonance, radiation and specific absorption rate (SAR) of the implantable PIFA were evaluated. Simulations were performed with ANSYS HFSS (High Frequency Structure Simulator), which is based on the finite element method (FEM). ANSYS HFSS was packaged in an UberCloud software container and ported to a 40-core server with 256GB RAM. These simulations were four times faster than on the local 16-core desktop workstation. The project team consisted of the Ozen Engineering team in Sunnyvale, CA, and the UberCloud professional services team providing the ANSYS HFSS container running in the OzenCloud.

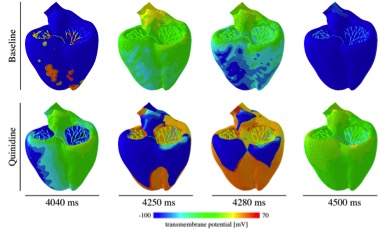

Studying Drug-Induced Arrhythmias of a Human Heart

The Stanford team, in conjunction with Dassault Systèmes SIMULIA, has developed a multi-scale three-dimensional model of the heart that can predict the risk of lethal arrhythmias caused by drugs. The project team added several capabilities to the Living Heart Model, such as highly detailed cellular models, the ability to differentiate cell types within the tissue, and the ability to compute electrocardiograms (ECGs). A key addition to the model is the so-called Purkinje network. It has a tree-like structure and is responsible for distributing the electrical signal quickly through the ventricular wall. It plays a major role in the development of arrhythmias, as it is composed of pacemaker cells that can self-excite. The inclusion of the Purkinje network was fundamental to simulating arrhythmias. The model is now able to bridge the gap between the effect of drugs at the cellular level and the chaotic electrical propagation that a patient would experience at the organ level.

Figure 5: Evolution of the electrical activity for the baseline case (no drug) and after the application of the drug Quinidine. The electrical propagation turns chaotic after the drug is applied, showing the high risk of Quinidine to produce arrhythmias.

The project team consisted of Francisco Sahli Costabal and Professor Ellen Kuhl from the Living Matter Laboratory at Stanford University; Dassault Systèmes SIMULIA, with Tom Battisti and Matt Dunbar providing Abaqus 2017 software and support; and Advania Data Centers, providing its HPCFLOW Bare Metal Cloud in Iceland (represented by Aegir Magnusson and Jon Tor Kristinsson). UberCloud supported the team by providing novel HPC container technology for ease of Abaqus cloud access and use. Hewlett Packard Enterprise, represented by Bill Mannel and Jean-Luc Assor, sponsored this successful cloud experiment.

For more info, visit UberCloud at: TheUberCloud.com and download the 2018 Compendium.

Wolfgang Gentzsch is president and co-founder of The UberCloud. Burak Yenier is co-founder and CEO of The UberCloud.


The Turbomachinery CFD GUI.

Local Specific Absorption Rate (SAR) distribution on upper side of male body model.