Test Benches Enable Technology ‘Drivers’

Testing addresses advanced driver assistance systems with greater efficiency.


June 1, 2013

Carmakers and their suppliers continuously confront greater complexity in their vehicles—as well as the need to reduce time to market, contain costs, satisfy customers and cope with increased regulatory pressures. One of the newest approaches for improving vehicle safety, advanced driver assistance systems (ADAS), involves all of these aspects. It has also attracted global interest: Market research firm ABI Research projects global ADAS revenues to reach $460 billion by 2020, up from $22.7 billion in 2012.

Festooned with vision, RADAR, LIDAR and other sensors, Carnegie Mellon University’s Boss vehicle was the winner of the 2007 DARPA Urban Challenge. Image courtesy of Tartan Racing.

While addressing the increased complexity of ADAS, test benches save time and reduce the cost of developing these systems. New technologies rely on sensor systems to alert drivers to dangers such as potential collisions, inadvertent lane changes and blind spots. Verifying these systems against carmakers’ quality requirements and regulatory safety requirements takes frequent rounds of physical testing. Virtual test benches have demonstrated the capability to reduce the number of real-world evaluations.

Defining/Specifying ADAS

Perhaps the safest vehicles are those that take the driver out of the equation. Despite successes such as Google’s self-driving car and Carnegie Mellon University’s Boss vehicle, developed by Tartan Racing, which won the 2007 Defense Advanced Research Projects Agency (DARPA) Urban Challenge for vehicles completing a course without driver interaction, such vehicles are still a long way from consumer sales. The Association for Unmanned Vehicle Systems International (AUVSI) is working toward making autonomous vehicles a viable means of transportation by 2022.

ADAS on the Way

Advanced driver assistance systems that have already been implemented in production vehicles or are in development for near-term vehicle introduction include:

Autonomous vehicles are the ultimate implementation of ADAS technology, and many aspects of ADAS are already available on high-end vehicles today. Vehicle original equipment manufacturers (OEMs) and their suppliers use test benches from various software tool companies, as well as their own custom solutions, to bring each successive generation of technology to market sooner and with lower, or at least contained, costs.

For development and verification of system designs, automotive OEMs and their Tier I suppliers follow the V-model. This development process helps ensure that the system meets its design specifications and performs as anticipated.
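As a rough illustration of the V-model’s structure, each design level on the left side of the V is paired with a verification level on the right side, and the work runs down the left side and then back up the right. The four level names below are a generic breakdown assumed for illustration, not Magna’s or Delphi’s actual process, and the `VLevel` type is hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VLevel:
    design: str        # left side of the V: what gets specified
    verification: str  # right side of the V: what checks it


# Generic levels (an assumption for illustration), ordered from the top of the V down.
V_MODEL = [
    VLevel("vehicle requirements", "vehicle acceptance testing"),
    VLevel("system architecture", "system integration testing (e.g., HIL)"),
    VLevel("component design", "component testing"),
    VLevel("software implementation", "unit testing"),
]


def execution_order(levels):
    """Walk down the left side of the V, then back up the right side."""
    down = [lvl.design for lvl in levels]
    up = [lvl.verification for lvl in reversed(levels)]
    return down + up


def verifies(levels, design_step):
    """Return the verification step that checks a given design step."""
    for lvl in levels:
        if lvl.design == design_step:
            return lvl.verification
    raise KeyError(design_step)


if __name__ == "__main__":
    for step in execution_order(V_MODEL):
        print(step)
```

Test benches, in this picture, live mostly on the right side of the V, which matches Raffa’s point below that they belong to the verification side.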

“When you look at the whole broad spectrum of modeling, there is the design side and then there is the verification side,” says Jim Raffa, global engineering director for driver assistance systems at Magna International’s Magna Electronics operating unit. “When you think about test benches, it really is more on the verification side and simulation side.”

Mark Lynn, chief engineer of active safety at Delphi Automotive Systems, notes there are four different phases of verification “that we would do for an active safety product or feature that will use them. A few of them are pretty common with any controller that you would apply to a vehicle.”

Hardware-in-the-loop (HIL) verification and vehicle performance testing are areas more specific to active safety systems. “Those are the things where there are unique methodologies that we do that are different in active safety than we would do in a body controller or crash-sensing controller,” says Lynn.

Visualizing the Environment

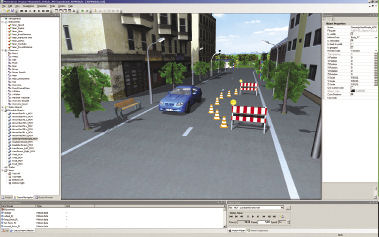

Unlike many other vehicle systems, ADAS depends on visual inputs to determine how the systems react. One of the ways to evaluate that visualization is through a tool such as dSPACE MotionDesk. MotionDesk reads the data from simulation tools and animates movable objects including the vehicle, wheels, steering wheel and more in real time in a virtual 3D environment.

A new rendering engine substantially improves the visualization capability of MotionDesk. According to Holger Krumm, product manager for test and experiment software at dSPACE, MotionDesk’s rendering rate has been increased from 20 to 60 Hz. “Now we are directly scaling with modern graphics hardware,” says Krumm.

The drag-and-drop capabilities of MotionDesk’s graphical user interface allow users to add elements to the scene.

Customers perform the scene management and determine how the expanded capability is used. For example, complex trees take a lot of rendering time, so a customer may choose whether or not to include them in the scene. “The customer can adapt his scenarios so they are running at his desired frame rates,” says Krumm. The option to fix the frame rate, for example at 20, 30 or 60 Hz, comes directly from camera systems’ need for a steady frame rate, he explains.
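The trade-off Krumm describes comes down to a per-frame time budget: at a fixed rate f, every frame must be rendered within 1/f seconds. The sketch below greedily keeps optional scene elements, cheapest first, while they still fit that budget. The element names and millisecond costs are invented for illustration, and real render costs do not simply add up, so treat this as a back-of-the-envelope model rather than how MotionDesk manages scenes.

```python
def frame_budget_ms(rate_hz: float) -> float:
    """Time available per frame at a fixed frame rate, in milliseconds."""
    return 1000.0 / rate_hz


def fit_scene(required, optional, rate_hz):
    """Keep all required elements, then add optional ones (cheapest first)
    while the summed render cost stays within the frame budget.

    required, optional: dicts mapping element name -> estimated render cost
    in ms (a hypothetical, purely additive cost model).
    """
    budget = frame_budget_ms(rate_hz)
    used = sum(required.values())
    if used > budget:
        raise ValueError(f"required elements need {used:.1f} ms > {budget:.1f} ms")
    kept = list(required)
    for name, cost in sorted(optional.items(), key=lambda kv: kv[1]):
        if used + cost <= budget:
            kept.append(name)
            used += cost
    return kept, used


if __name__ == "__main__":
    required = {"road": 4.0, "ego vehicle": 2.0, "target vehicles": 3.0}
    optional = {"buildings": 5.0, "detailed trees": 12.0, "signs": 1.0}
    for rate in (20, 30, 60):
        kept, used = fit_scene(required, optional, rate)
        print(f"{rate} Hz: budget {frame_budget_ms(rate):.1f} ms, "
              f"used {used:.1f} ms, scene = {kept}")
```

With these made-up costs, the detailed trees fit the 50 ms and 33.3 ms budgets at 20 and 30 Hz but are dropped at 60 Hz, where only 16.7 ms per frame is available.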

As an example, Krumm points to the Ford Focus, one of the cars with the largest portfolio of driver assistance functions in Europe. Its cameras need 30 Hz, whereas modern stereo cameras need at least 60 Hz. The improved capabilities of MotionDesk allow the evaluation of multi-track maneuvers.
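Why the step from 20 to 60 Hz rendering matters for these cameras can be seen with simple arithmetic: a camera capturing at f_cam needs a freshly rendered image every 1/f_cam seconds (33.3 ms at 30 Hz, 16.7 ms at 60 Hz). The sketch below counts how often an idealized camera would see the same rendered image twice in a row. It assumes perfectly aligned clocks and zero latency, which a real rig will not have, and the function name is hypothetical.

```python
def repeated_frames(render_hz: int, camera_hz: int, seconds: int = 1) -> int:
    """Count camera captures that receive the same rendered image as the
    previous capture, i.e., captures for which no new image was rendered.

    Idealized assumptions: integer rates, both clocks start at t = 0,
    the camera sees the most recently rendered frame, no latency.
    """
    repeats = 0
    last_frame = None
    for k in range(camera_hz * seconds):
        # Latest rendered frame at capture time k / camera_hz, computed with
        # integer arithmetic to avoid floating-point rounding at frame edges.
        frame = (k * render_hz) // camera_hz
        if frame == last_frame:
            repeats += 1
        last_frame = frame
    return repeats


if __name__ == "__main__":
    for render_hz in (20, 60):
        for camera_hz in (30, 60):
            print(f"render {render_hz} Hz, camera {camera_hz} Hz: "
                  f"{repeated_frames(render_hz, camera_hz)} repeated images per second")
```

At 20 Hz rendering, a 30 Hz camera would get a stale image on 10 of every 30 captures; at 60 Hz rendering, both a 30 Hz and a 60 Hz camera get a fresh image on every capture.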

HIL Testing

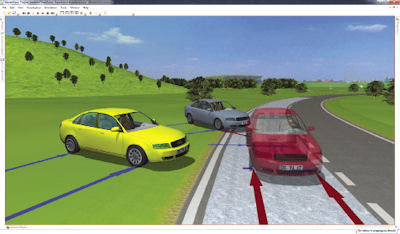

In HIL testing, visualizing the vehicle’s dynamic behavior allows system engineers to easily determine whether the vehicle is behaving properly or drifting to one side or the other.
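The same judgment can also be automated alongside the visualization. As a sketch, assuming the HIL run logs the vehicle’s lateral offset from the lane center over time, the least-squares slope of that offset gives a drift rate. The function names and the 0.05 m/s threshold are illustrative assumptions, not a published criterion.

```python
def lateral_drift_rate(times, offsets):
    """Least-squares slope of lateral offset (m) versus time (s), in m/s."""
    n = len(times)
    if n < 2:
        raise ValueError("need at least two samples")
    mean_t = sum(times) / n
    mean_y = sum(offsets) / n
    sxx = sum((t - mean_t) ** 2 for t in times)
    if sxx == 0:
        raise ValueError("sample times must not all be equal")
    sxy = sum((t - mean_t) * (y - mean_y) for t, y in zip(times, offsets))
    return sxy / sxx


def is_drifting(times, offsets, max_rate_mps=0.05):
    """Flag a run whose lateral offset trends faster than max_rate_mps.
    The 0.05 m/s default is an arbitrary illustrative threshold."""
    return abs(lateral_drift_rate(times, offsets)) > max_rate_mps


if __name__ == "__main__":
    t = [0.0, 1.0, 2.0, 3.0, 4.0]
    print(is_drifting(t, [0.0, 0.1, 0.2, 0.3, 0.4]))        # steady sideways creep
    print(is_drifting(t, [0.02, -0.01, 0.0, 0.01, -0.02]))  # noise around center
```

Fitting a slope rather than checking a single sample keeps ordinary sensor noise around the lane center from being mistaken for a drift.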

MotionDesk’s hardware access panel provides a simple connection to a HIL simulator, from which it obtains maneuver information as well as model and generated scene information to visualize the car’s reaction. “If we are talking about vehicle dynamics, you can do this for every mechanical system,” says Krumm.

Software tool suppliers in the automotive space collaborate and partner extensively. For example, Mentor Graphics, which works in close cooperation with other tool suppliers, offers products that address some ADAS problems, including HIL simulation. The company’s System Vision allows users to model, simulate and analyze mechatronic systems.

“What we provide gives you the fidelity of the model you are trying to simulate in representing the physics of what you are trying to represent,” says Subba Somanchi, principal engineer in the System-Level Engineering Division of Mentor Graphics.

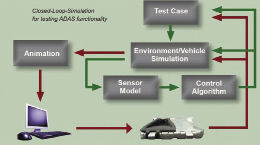

Berner & Mattner’s recently released MESSINA 3.7, a test platform for HIL and software-in-the-loop (SIL) tests, also supports closed-loop evaluations. The tool allows vehicle OEMs and their suppliers to perform automated evasive-maneuver testing in the laboratory. Real-time driving scenarios are simulated using the MATLAB/Simulink C-API (version 2011a), which provides a standardized interface.
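In an automated evasive-maneuver campaign, test cases are usually generated by sweeping scenario parameters. As an illustration only, not MESSINA’s interface, the sketch below sweeps vehicle speed and uses a simplified point-mass model (constant accelerations, no reaction time; the 8 m/s² limits and 2 m lateral offset are assumptions) to compare the distance needed to avoid a stationary obstacle by braking with the distance needed by steering around it.

```python
import math


def last_point_to_brake(v, a_x):
    """Distance (m) needed to stop from speed v (m/s) at constant deceleration a_x (m/s^2)."""
    return v * v / (2.0 * a_x)


def last_point_to_steer(v, a_y, lateral_offset):
    """Distance (m) covered while shifting sideways by lateral_offset (m)
    at constant lateral acceleration a_y (m/s^2), with speed held at v."""
    t = math.sqrt(2.0 * lateral_offset / a_y)
    return v * t


def sweep(speeds_mps, a_x=8.0, a_y=8.0, lateral_offset=2.0):
    """One row per test case: (speed, braking distance, steering distance, shorter option)."""
    rows = []
    for v in speeds_mps:
        brake = last_point_to_brake(v, a_x)
        steer = last_point_to_steer(v, a_y, lateral_offset)
        rows.append((v, brake, steer, "steer" if steer < brake else "brake"))
    return rows


if __name__ == "__main__":
    for v, brake, steer, best in sweep([5.0, 10.0, 15.0, 20.0, 30.0]):
        print(f"{v:5.1f} m/s  brake {brake:6.1f} m  steer {steer:6.1f} m  -> {best}")
```

Under these assumptions, braking needs less distance at low speed while steering needs less at higher speed, which is why evasive steering becomes a scenario worth testing systematically.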

Delphi’s Lynn acknowledges that HIL testing is typically done at the vehicle OEM. Delphi engineers use the OEM’s setup because it requires all of the control units that go on a vehicle; the testing is performed on a tabletop rather than in a vehicle. “It’s there that you can really test things like closed-loop control algorithms,” he says. “When we are talking about algorithms for auto braking or adaptive cruise control, then you need that level of interaction.”
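What makes such algorithms “closed-loop” is that the controller’s output changes the very inputs it reads next. A minimal software-only sketch (closer to SIL than HIL) shows the idea: a constant-time-gap adaptive cruise control law drives a point-mass ego vehicle behind a lead vehicle that brakes from 25 to 15 m/s. The control law, gains, actuator limits and scenario are illustrative assumptions, not Delphi’s or any OEM’s calibration.

```python
def simulate_acc(t_end=60.0, dt=0.05, d0=5.0, h=1.5,
                 k_gap=0.2, k_vel=0.6, a_min=-6.0, a_max=2.0):
    """Closed-loop ACC simulation with explicit Euler integration.

    Scenario (assumed): both cars start at 25 m/s with a 50 m gap; at
    t = 10 s the lead car brakes at 3 m/s^2 down to 15 m/s.
    Controller (assumed): track a desired gap of d0 + h * v_ego.
    Returns (min_gap, final_gap, final_ego_speed) in m, m, m/s.
    """
    gap, v_ego, v_lead = 50.0, 25.0, 25.0
    min_gap = gap
    for i in range(int(round(t_end / dt))):
        t = i * dt
        # Controller (the "ECU"): reads gap and speeds, commands an acceleration.
        desired_gap = d0 + h * v_ego
        a_cmd = k_gap * (gap - desired_gap) + k_vel * (v_lead - v_ego)
        a_cmd = max(a_min, min(a_max, a_cmd))  # actuator limits
        # Plant (the simulated world): advance both vehicles one time step.
        gap += (v_lead - v_ego) * dt
        v_ego += a_cmd * dt
        if t >= 10.0 and v_lead > 15.0:
            v_lead = max(15.0, v_lead - 3.0 * dt)  # lead brakes at 3 m/s^2
        min_gap = min(min_gap, gap)
    return min_gap, gap, v_ego


if __name__ == "__main__":
    min_gap, final_gap, final_v = simulate_acc()
    print(f"min gap {min_gap:.1f} m, final gap {final_gap:.1f} m, final speed {final_v:.1f} m/s")
```

In a HIL setup, the controller lines would run on the real control unit while the plant lines run on the simulator, with the loop closed through the rig’s I/O.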

Rolling Their Own

Even with all the tools and test benches that the software industry provides, some automakers and their Tier I suppliers still develop their own custom solutions. For example, Delphi’s data visualization (DV) tool is a custom product developed over eight generations of active safety systems.

“We use it to collect data in a particular format, and visualize what the sensor sees around the vehicle,” says Lynn. “The purpose of collecting all this data is, in the process of doing this, you are going to surface performance issues.”
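Delphi’s DV tool and its data format are proprietary, so the following is only a generic sketch of one step such a tool needs: converting logged sensor returns (range and azimuth relative to the sensor) into vehicle-frame coordinates so they can be plotted around the car. The `Detection` and `SensorMount` layouts and the frame conventions (x forward, y left, angles positive to the left) are assumptions.

```python
import math
from dataclasses import dataclass


@dataclass
class Detection:
    """One logged sensor return (hypothetical record layout)."""
    timestamp_s: float
    range_m: float
    azimuth_deg: float  # positive = left of the sensor boresight


@dataclass
class SensorMount:
    """Where the sensor sits on the vehicle (hypothetical layout)."""
    x_m: float      # forward of the vehicle reference point
    y_m: float      # left of the vehicle reference point
    yaw_deg: float  # boresight direction, positive = rotated left


def to_vehicle_frame(det, mount):
    """Convert a polar detection to (x, y) in the vehicle frame."""
    angle = math.radians(mount.yaw_deg + det.azimuth_deg)
    return (mount.x_m + det.range_m * math.cos(angle),
            mount.y_m + det.range_m * math.sin(angle))


def frame_points(detections, mount, t_s, tol_s=0.025):
    """All detections logged within tol_s of time t_s, as plot-ready points."""
    return [to_vehicle_frame(d, mount)
            for d in detections if abs(d.timestamp_s - t_s) <= tol_s]


if __name__ == "__main__":
    front_radar = SensorMount(x_m=3.7, y_m=0.0, yaw_deg=0.0)
    log = [Detection(0.0, 20.0, 0.0), Detection(0.0, 35.0, -5.0), Detection(0.1, 19.5, 0.0)]
    print(frame_points(log, front_radar, t_s=0.0))
```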

Delayed by 0.5 seconds, the red car is simulated with Electronic Stability Program (ESP) support and stays on track. The yellow and grey cars were simulated without ESP support.

After performing real-world profile testing and collecting large quantities of data, Delphi engineers develop test scenarios to evaluate ADAS features. For some functions, such as collision-imminent avoidance with full-authority braking, this can involve as much as 1 million km of real-world data to demonstrate the right level of confidence in the system for functional safety. The bench testing seeks to answer two critical operational questions.
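The article does not say how Delphi arrives at its mileage figure. One standard statistical way to reason about such numbers, offered here only as an illustration, is the zero-failure bound for a Poisson process: if no false activations are observed over N km, the one-sided upper confidence bound on the false-activation rate at confidence level C is -ln(1 - C) / N, which at 95% is roughly 3/N (the “rule of three”).

```python
import math


def zero_failure_rate_bound(distance_km, confidence=0.95):
    """Upper confidence bound on the event rate (per km) after observing
    zero events over distance_km, assuming events follow a Poisson process."""
    return -math.log(1.0 - confidence) / distance_km


def km_needed(target_rate_per_km, confidence=0.95):
    """Event-free distance needed to claim rate <= target at the given confidence."""
    return -math.log(1.0 - confidence) / target_rate_per_km


if __name__ == "__main__":
    print(f"{zero_failure_rate_bound(1e6):.3e} events per km after 1 million clean km")
    print(f"{km_needed(1e-7):.3e} km needed for a 1e-7 per km claim")
```

Under this model, 1 million event-free km supports a 95% bound of about 3 false activations per million km, and each tenfold tighter target needs ten times the distance.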

“Does the system actuate in the manner that you want when it is supposed to, and does it provide the right level of performance?” Lynn notes. “Then you have the reverse of that: You want to make sure that the system doesn’t actuate when it shouldn’t under all conditions. And that’s almost the more important of the two criteria.”
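Lynn’s two criteria correspond to the two error types of any binary decision: a missed activation (the system should have acted and did not) and a false activation (it acted when it should not have). The sketch below scores labeled bench runs on both. The record layout, scenario names and pass rule (no false activations tolerated, a configurable number of misses) are assumptions chosen to mirror his remark that avoiding unwanted actuation is almost the more important criterion.

```python
from dataclasses import dataclass


@dataclass
class BenchRun:
    """One replayed scenario and its outcome (hypothetical layout)."""
    scenario: str
    should_actuate: bool  # ground truth from the scenario definition
    did_actuate: bool     # what the function under test actually did


def score(runs):
    """Split results into the two criteria: missed and false activations."""
    missed = [r.scenario for r in runs if r.should_actuate and not r.did_actuate]
    false_acts = [r.scenario for r in runs if r.did_actuate and not r.should_actuate]
    return {"missed_activations": missed, "false_activations": false_acts}


def passes(runs, allowed_missed=0):
    """Assumed gate: zero false activations, misses up to a budget."""
    s = score(runs)
    return not s["false_activations"] and len(s["missed_activations"]) <= allowed_missed


if __name__ == "__main__":
    runs = [
        BenchRun("stopped car ahead", True, True),
        BenchRun("overhead sign", False, True),
        BenchRun("cut-in", True, False),
        BenchRun("empty road", False, False),
    ]
    print(score(runs), passes(runs, allowed_missed=1))
```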

Closed-loop simulation tests various aspects of ADAS features, including sensor models and the control algorithms.

Magna International also develops its own custom test benches. “As we move forward, we’ve been putting together our own hardware-in-the-loop type systems that will record and play back video,” says Raffa. “We’re also working on systems that will record external driving information like location and weather conditions.”
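Raffa does not describe Magna’s implementation. The following minimal sketch illustrates the idea with a hypothetical `RecordedFrame` layout that stores each camera frame alongside the external conditions he mentions (location and weather), and a `play_back` function that feeds frames to the function under test in capture order, optionally at the original pace.

```python
import time
from dataclasses import dataclass


@dataclass
class RecordedFrame:
    """One recorded camera frame plus drive context (hypothetical layout)."""
    t_s: float     # capture time relative to the start of the drive
    image: bytes   # raw camera frame; the encoding is left open here
    lat: float
    lon: float
    weather: str   # e.g., "clear", "rain", "fog"


def play_back(frames, function_under_test, realtime=False):
    """Replay recorded frames to a vision function in capture order.
    With realtime=True, sleep between frames to reproduce the original timing."""
    outputs = []
    ordered = sorted(frames, key=lambda f: f.t_s)
    if not ordered:
        return outputs
    start_wall = time.monotonic()
    for f in ordered:
        if realtime:
            delay = (start_wall + f.t_s - ordered[0].t_s) - time.monotonic()
            if delay > 0:
                time.sleep(delay)
        outputs.append((f.t_s, function_under_test(f.image)))
    return outputs


if __name__ == "__main__":
    drive = [RecordedFrame(0.1, b"bb", 42.0, -83.0, "rain"),
             RecordedFrame(0.0, b"a", 42.0, -83.0, "rain")]
    print(play_back(drive, len))  # len stands in for a real detection algorithm
```

Replaying faster than real time suits offline regression runs; real-time pacing matters when the function under test runs on target hardware.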

How far suppliers go in building custom tools depends on how well software tool vendors have addressed their individual requirements.

On the Horizon

So, what’s the bottom line on software tools and testing when it comes to cost and time?

“The primary driver for extending ADAS for more volume, more broader applications across vehicles is generally going to be component cost or regulatory drivers—and those things are happening today,” says Lynn. “But it does address our ability to reduce the development cost steps. That’s an element of the final price to the OEMs. It’s not a huge factor, but it is a factor.”

Reduced vehicle testing through the increasing use of test benches contributes to reducing development time and costs. “We use modeling and simulation tools throughout the development process to reduce our development time, and ultimately our development costs,” says Raffa.

Time is perhaps a more significant factor to Tier I suppliers and their automotive customers, he points out.

“Anytime you can reduce the amount of time I have to spend in a vehicle—and you do have to verify these algorithms in a vehicle—but if you are able to spend that time in a vehicle, create a video library of all these different drive scenarios, and then be able to play those back on the bench, it just saves a tremendous amount of time,” says Raffa.
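A video library of drive scenarios also needs a way to pick which recordings to replay for a given change. A hedged sketch, assuming each recorded drive carries hypothetical condition tags such as weather, lighting and road type; `check` stands in for whatever pass/fail evaluation the bench applies.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Scenario:
    """A recorded drive in the library (hypothetical layout)."""
    name: str
    tags: frozenset  # e.g., frozenset({"rain", "night", "highway"})


def select(library, required=(), excluded=()):
    """Scenarios carrying every required tag and none of the excluded ones."""
    req, exc = set(required), set(excluded)
    return [s for s in library if req <= s.tags and not (exc & s.tags)]


def regression_run(library, check, required=(), excluded=()):
    """Replay the selected scenarios through `check` (a callable returning
    True on pass) and return the names of those that failed."""
    return [s.name for s in select(library, required, excluded) if not check(s)]


if __name__ == "__main__":
    library = [
        Scenario("night_rain_highway", frozenset({"night", "rain", "highway"})),
        Scenario("day_clear_urban", frozenset({"day", "clear", "urban"})),
        Scenario("day_rain_urban", frozenset({"day", "rain", "urban"})),
    ]
    print([s.name for s in select(library, required={"rain"}, excluded={"night"})])
```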

After developing eight generations of ADAS technology, Delphi’s Lynn sums up the time-to-market situation. “It is difficult to measure what the time-to-market reduction is, but all the things we’ve learned over time and all of the tools that we’ve implemented, have been mechanisms, methods to allow us to meet the increased expectations from vehicle OEMs for faster and faster market introduction,” he concludes. “It’s been to keep pace with their growing expectations.”

Randy Frank is a contributor to DE. Send e-mail about this article to [email protected].

More Information

Association for Unmanned Vehicle Systems International

Berner & Mattner Systemtechnik GmbH

Defense Advanced Research Projects Agency

Google test-driven car (video)


About the Author

Randy Frank is a freelance technology writer based in Arizona. Contact him via [email protected].
