Latest News

August 1, 2016

“The age of ‘Big Data’ is here: data of unprecedented size is becoming ubiquitous, which brings new challenges and new opportunities,” observed Peter Richtárik of the University of Edinburgh’s School of Mathematics and Zheng Qu of the University of Hong Kong. “With this comes the need to solve optimization problems of unprecedented sizes.”

The two Big Data experts, organizers of an “Optimization and Big Data 2015” workshop, continued by writing: “Machine learning, compressed sensing, social network science and computational biology are some of many prominent application domains where it is increasingly common to formulate and solve optimization problems with billions of variables.”

In the engineering of discretely manufactured products, few topics today are more widely discussed than how to prepare for the age of the Industrial Internet of Things (IIoT) and Industry 4.0. The largest effects of these developments, most centrally the resulting tsunami of data about product performance in service, have yet to hit. But when they do, the impact, and the opportunity, for both users and developers of design space exploration and design optimization technologies could be transformative.

Data Provides Higher Confidence in Design Space Exploration

Before optimizing a design, it can be useful to employ design space exploration—a family of quantitative methods that help engineers gain a better, more complete understanding of a new product’s potential design space by discovering which design variables will have the greatest impact on product performance.

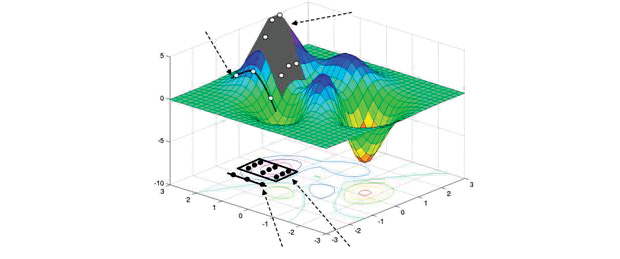

The essential quantitative method for design space exploration is design-of-experiment (DOE) studies. In a DOE study, an analysis model is automatically evaluated multiple times, with the design variables set to different values in each iteration. The results—often mapped as a 3D “response surface model”—identify which variable(s) affect the design the most, and which affect it the least. This information allows variables that are not important to be ignored in subsequent phases of the design process, or set to values that are most convenient or least costly.

Response surface model of a product’s design space computed from results of a DOE study. Image courtesy of Dr. Peter Hallschmid, School of Engineering, UBC Okanagan.
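As a rough illustration of the DOE idea described above, the following minimal Python sketch runs a two-level full-factorial study and ranks the design variables by the size of their main effect. Everything in it is an assumption made for illustration: `analysis_model` is a hypothetical stand-in for a real simulation, and the variable names and level values are invented. A production study would typically use a dedicated DOE tool and a more economical sampling plan.

```python
from itertools import product
from statistics import mean


def analysis_model(thickness, width, fillet_radius):
    """Hypothetical stand-in for a real analysis model (for example, an FEA run)."""
    return (4.0 * thickness + 0.5 * width + 0.01 * fillet_radius
            + 0.2 * thickness * width)


# Two levels (low, high) per design variable. Values are illustrative only.
levels = {
    "thickness": (1.0, 3.0),
    "width": (10.0, 20.0),
    "fillet_radius": (0.5, 1.5),
}


def run_full_factorial(model, levels):
    """Evaluate the model once for every combination of variable levels."""
    names = list(levels)
    runs = []
    for combo in product(*(levels[name] for name in names)):
        settings = dict(zip(names, combo))
        runs.append((settings, model(**settings)))
    return runs


def main_effects(runs, levels):
    """Main effect = mean response at the high level minus mean at the low level."""
    effects = {}
    for name, (low, high) in levels.items():
        high_mean = mean(resp for settings, resp in runs if settings[name] == high)
        low_mean = mean(resp for settings, resp in runs if settings[name] == low)
        effects[name] = high_mean - low_mean
    return effects


runs = run_full_factorial(analysis_model, levels)
effects = main_effects(runs, levels)

# Variables sorted from most to least influential on the response.
ranking = sorted(effects, key=lambda name: abs(effects[name]), reverse=True)
print(ranking)
```

In this toy setup the variable at the end of the ranking has almost no influence on the response, which makes it the kind of variable that could be fixed at a convenient or low-cost value in later design phases.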

How can IIoT-gathered Big Data help? In setting up and carrying out a DOE study, the methods used to sample the product’s potential design space, and the confidence in the results delivered, are determined in part by how much is already known about the design being studied. Product performance information, collected in the enormous volumes promised from the IIoT, holds great potential to fill in many regions of the design space of an existing product with empirical data, thus giving higher confidence in exploring other regions of the design space for their performance parameters, and in the sensitivity and trade studies carried out on the DOE results. This will be invaluable for more efficiently and knowledgeably developing future product models and variants, as well as for refining a current model in production.

Better-Informed Robustness and Reliability Optimization via Data

Another area that will benefit from large volumes of empirical data about product performance is robustness-and-reliability optimization, aka stochastic optimization.

Product designs are nominal, while manufacturing and operating conditions are real-world. Finite geometric tolerances, variations in material properties, uncertainty in loading conditions and other variances encountered by a product in either production or use can cause it to perform differently from its nominal as-designed values. Therefore, robustness and reliability as design objectives beyond the nominal design are often desirable. Performance of robust and reliable designs is less affected by these variations, and remains at or above acceptable levels in all conditions.

To evaluate the robustness and reliability of a design during optimization, its variables and system inputs are made stochastic—that is, defined in terms of a mean value and a statistical distribution function. The resulting product performance is then measured in terms of a mean value and its variance.
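One common way to carry out this kind of evaluation is Monte Carlo sampling, sketched below in Python. This is only a sketch under stated assumptions: the `performance` function, the normal distributions, and all numeric values are hypothetical, and real robustness studies may use other sampling or approximation methods. Each stochastic input is defined by a mean and a distribution, and the resulting performance is summarized by its mean and variance.

```python
import random
from statistics import mean, variance


def performance(thickness, load):
    """Hypothetical performance measure: a stress-like ratio of load to thickness."""
    return load / thickness


def robustness_estimate(nominal_thickness, n_samples=10_000, seed=0):
    """Sample the stochastic inputs and return (mean, variance) of performance."""
    rng = random.Random(seed)  # fixed seed so the sketch is repeatable
    samples = []
    for _ in range(n_samples):
        # Assumed manufacturing scatter around the nominal thickness.
        thickness = rng.gauss(nominal_thickness, 0.05)
        # Assumed uncertainty in the operating load.
        load = rng.gauss(100.0, 10.0)
        samples.append(performance(thickness, load))
    return mean(samples), variance(samples)


mean_thin, var_thin = robustness_estimate(nominal_thickness=2.0)
mean_thick, var_thick = robustness_estimate(nominal_thickness=4.0)

print(f"thin:  mean={mean_thin:.2f}, variance={var_thin:.2f}")
print(f"thick: mean={mean_thick:.2f}, variance={var_thick:.2f}")
```

Comparing the two candidate designs on variance as well as mean shows which one is less sensitive to the same input scatter, which is the property a robustness objective rewards.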

Collected Big Data on a product’s in-field behavior can be of great benefit in revealing where to center the robust optimum design point, either for refinement of a model in service or for development of a follow-on product. The same holds true for collected data on manufacturing variations. Both hold potential to increase the certainty of stochastic optimizations, and to reduce the number of variations needing study, through knowledge of where the bounds of the actual variations in manufacturing or performance have been shown to lie.
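To make this concrete, here is a minimal Python sketch of how collected measurements might be turned into the parameters of a stochastic input. The `field_loads` values are invented for illustration and do not come from any real product; the choice of a simple mean, standard deviation, and observed range is likewise an assumption, and a real study would likely fit and test a distribution more carefully.

```python
from statistics import mean, stdev

# Hypothetical in-service load measurements (illustrative values only).
field_loads = [96.2, 103.5, 99.1, 101.8, 97.4, 104.9, 100.3, 98.7]


def summarize(samples):
    """Estimate distribution parameters and observed bounds from field data."""
    return {
        "mean": mean(samples),    # where to center the stochastic input
        "stdev": stdev(samples),  # spread of the observed variation
        "low": min(samples),      # lowest value actually observed
        "high": max(samples),     # highest value actually observed
    }


summary = summarize(field_loads)
print(summary)
```

The observed bounds indicate the range of variation that actually needs study, while the mean and standard deviation can replace assumed values in the definition of the stochastic input.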

Impact on Technology Providers

What will all this mean for technology providers? As Big Data optimization experts Richtárik and Qu pointed out: “Classical algorithms are not designed to scale to instances of this size and hence new approaches are needed. These approaches utilize novel algorithmic design involving tools such as distributed and parallel computing, randomization, asynchronicity, decomposition, sketching and streaming.”

Just as PLM (product lifecycle management) vendors are now engaged in sometimes radical overhauls of their product lines in the face of the IIoT and Big Data revolution, developers of today’s design exploration and optimization software products (often designed specifically to help engineers draw reliable conclusions from sparse data sets) may elect to modify, or even re-architect, some of their products to make the most of the Big Data revolution, and to help their users do the same.


About the Author

Bruce Jenkins is president of Ora Research (oraresearch.com), a research and advisory services firm focused on technology business strategy for 21st-century engineering practice.
