Cloud HPC Rising to Meet New Workplace Demands

With many organizations redefining the future of work and collaboration, cloud-enabled teams and tools are increasingly the lifeblood of business continuity.

October 7, 2021

Cloud: From business continuity to competitive advantage

IT professionals everywhere are pursuing cloud-first initiatives, accelerated by unprecedented changes in the workplace and advancements in cloud technologies. Adding urgency to the migration, stakeholders on the business side are ratcheting up demand for capacity and the latest technologies to accommodate new use cases.

With job growth and hiring booming back to life in 2021, it’s no surprise that many industries are touting exciting milestones and aggressive release roadmaps. From the deep tech innovation happening in land and air travel to the computational capabilities that power that innovation, we are seeing an exciting boom in science and industrial R&D.

With many organizations redefining the future of work and collaboration, cloud-enabled teams and tools are increasingly the lifeblood of business continuity. IT and R&D teams who rely on High Performance Computing (HPC) to drive product innovation indicated that they believe 73% of their HPC workloads will be in the cloud in the next 5 years.

Historically, concerns around cost, security, and specialized use cases prevented some organizations from making the cloud leap or limited deployments to isolated workloads or burst compute needs. However, cloud acceptance arrived sooner than many expected: 2020 saw ‘extreme high growth’ of 78.8% in the HPC cloud segment, significantly outperforming expectations (Intersect360).

Rising to meet this growing demand, state-of-the-art cloud HPC offerings like Rescale on Azure, featuring AMD EPYC™ Processors, are flipping the script to turn these former points of concern into points of differentiation.

Challenging common cloud HPC misconceptions

“Cloud HPC can’t handle my specialized use case.”

Cloud HPC has come a long way since early adopters started experimenting with running their batch workloads in the cloud nearly a decade ago.

Nowadays, cloud has all but transformed the modern enterprise, though some specific workloads still remain on-premises. In the domain of scientific and engineering research and development, the ability to perform massive data processing and specialized methods of simulation has been a key differentiator. So, to keep their competitive edge, many leading organizations built specialized supercomputers tailored specifically for their use cases.

When cloud computing was first introduced, engineers and IT professionals alike could not imagine that cloud would ever contend with their homegrown data centers specifically built for computation that makes vehicles safer or airplanes more efficient.

As cloud service providers raced to provide the general-purpose scale that enterprise resource planning (ERP) applications craved, curious HPC engineers stumbled as they tested their basic batch workloads in the cloud. They ran into issues running jobs that required multi-node HPC clusters, such as computational fluid dynamics and other workloads common in aerospace and automotive use cases.

Regardless of cloud or on-premises deployments, spinning up a cluster for a specific use case requires knowledge of workload requirements, hardware types, proficiency with software licensing and installation, and understanding of command line and message passing interfaces.

Moreover, the cloud offers a plethora of hardware, storage, security, and network architectures that can overwhelm the untrained and make it challenging to deploy optimal and secure resources. Additionally, early cloud infrastructure was not equipped for the high clock speeds, core counts, interconnect, and memory requirements of many workloads like computational chemistry, weather prediction, and seismic simulation.

Today, leading cloud providers offer a full arsenal of hardware types built specifically for varying HPC workload demands. Semiconductor and electronics design firms often maintain a quiver of compute types to accommodate the different stages of design verification. Similarly, life science organizations take advantage of cloud flexibility and choice to scale and toggle the GPU and CPU resources to conduct drug discovery with simulations like molecular dynamics and crystallization.

We are now at a phase of cloud maturity where HPC is not only possible across unique use cases; it is actually enabling new ones. New discoveries and innovations are being born from cloud-native startups that can spin up the precise resources they need and shift at a moment’s notice. As the rate of new hardware releases grows exponentially and organizations show a preference for newer hardware (Big Compute State of Cloud HPC Report), we expect to see HPC practitioners from all corners of industry flock to cloud for burgeoning use cases like machine learning, generative design, and digital twins.

“Cloud HPC is not economical.”

For nearly 30 years, the Top500 list has tracked the leading supercomputers, and in 2021, cloud-native supercomputers made the list for the first time, placing in the top 10% of systems. This pivotal milestone signals that cloud performance is ready for the big leagues. But what about cost?

The cost of cloud computing has been a point of contention for many IT leaders who cringe every time they hear claims of “limitless compute.” HPC applications crave resources. On the business side, the more data that is processed, the more accurate the model will be and (likely) the better the finished product will be. Companies have always grappled with balancing business users’ demands with the allotted R&D funding.

Many HPC/IT administrators responsible for making HPC infrastructure decisions have relied on the notion that on-premises outperforms cloud on the basis of cost per core-hour. Modern cloud hardware economics are challenging these commonly held cost assumptions, and cloud providers have matured significantly in their overall support, leading to better spend efficiency.

As we’ve discussed in The HPC Buyer’s Guide, there are many considerations for calculating hard and soft costs associated with HPC operations, but for the sake of a focused argument we can compare the cost-performance of cloud and on-prem infrastructure.

To maximize the economics of infrastructure decisions, Rescale gives organizations access to extensive and continuous cost-performance data. This data provides an index, the Rescale Performance Index (RPI), for comparing and choosing among the ever-growing portfolio of compute hardware types. Because many customers migrate from on-premises, Rescale also has benchmark data from those deployments.

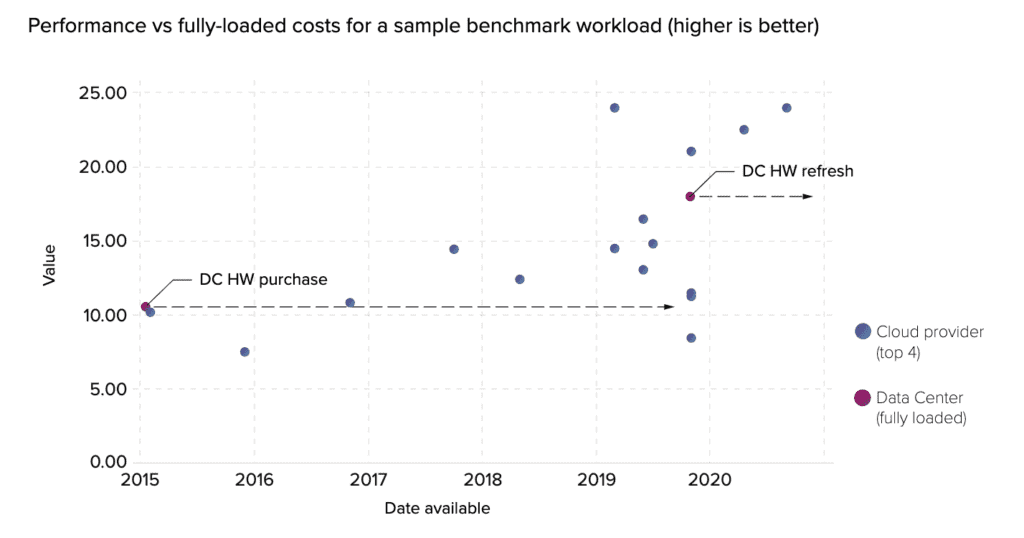

A plot of benchmarks of new hardware in the cloud and in the data center shows that the index of performance / cost (‘Value’ in the figure above) of new cloud hardware increases faster and more frequently than that of a fully-loaded data center install. Being able to continuously optimize, or shift to more performant hardware, can help organizations recapture 20% of their compute infrastructure costs (Intelligent Computing for Digital R&D).

When looking specifically at HPC hardware like AMD CPUs on Azure, we can note a positive trend in RPI, which means that practitioners can expect better performance while keeping costs constant. Because the cloud offers access to increased performance for the same cost each year, over time the total performance delivered from a fixed budget in the cloud exceeds the performance of a fixed on-prem cluster for the same total cost.
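The fixed-budget argument above can be made concrete with a little arithmetic. The following is a minimal sketch, not Rescale benchmark data: the function name, the yearly budget, the starting performance per dollar, and the 15% annual improvement are all hypothetical values chosen for illustration. It assumes both options start at the same performance per dollar and spend the same amount each year, with the on-prem cluster never refreshed.

```python
def cumulative_performance(budget_per_year, base_value, annual_gain, years):
    """Total performance bought over `years` with a fixed yearly budget.

    base_value:  performance units per dollar in year 0 (hypothetical unit).
    annual_gain: fractional yearly improvement in performance per dollar;
                 0.0 models hardware that is never refreshed.
    """
    total = 0.0
    for year in range(years):
        # Performance per dollar available in this year.
        value = base_value * (1.0 + annual_gain) ** year
        total += budget_per_year * value
    return total


# Illustrative inputs only (assumptions, not measured figures).
BUDGET = 100_000   # dollars spent per year in both scenarios
BASE_VALUE = 1.0   # same starting performance per dollar for both
YEARS = 5

on_prem = cumulative_performance(BUDGET, BASE_VALUE, 0.0, YEARS)
cloud = cumulative_performance(BUDGET, BASE_VALUE, 0.15, YEARS)

print(f"On-prem total performance: {on_prem:,.0f}")
print(f"Cloud total performance:   {cloud:,.0f}")
print(f"Cloud advantage:           {cloud / on_prem - 1:.1%}")
```

Under these assumptions the two options deliver the same performance in the first year, and the gap widens every year after that. The size of the gap depends entirely on the assumed improvement rate, so real benchmark data should replace the placeholder values before drawing conclusions.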

After looking at the pound-for-pound comparison of hardware economics, we can now consider the other factors that can inflate HPC costs, namely licensing and staffing, which significantly outweigh hardware costs for most organizations. With a sharp increase in demand for HPC expertise and talent in short supply, the feasibility of building and maintaining on-premises deployments is expected to diminish, further tipping the scale in favor of cloud-hosted solutions.

“Cloud HPC is not secure.”

According to an eyebrow-raising datapoint shared recently by Gartner, “Public cloud IaaS workloads will experience 60% fewer security incidents than traditional data centers by 2020. And at least 95% of all security failures in the cloud will be caused by the customer.”

This may come as a surprise for those who believe that the cloud is riddled with vulnerabilities. Public cloud has, historically, generated concern and speculation among enterprise IT organizations, often enough to block cloud HPC migrations, especially for companies that have specific data regulations or compliance standards to adhere to.

These concerns are founded in a rational mistrust of infrastructure that is not physically under their IT supervision or behind their enterprise-wide firewall and policies. Understandably so: many bleeding-edge HPC workloads involve crunching sensitive intellectual property and data governed by compliance standards like ITAR, FedRAMP, and NIST.

However, cloud service providers are eagerly working to change this narrative, and have the policies and instruments in place to demonstrate their efforts effectively and build customer trust. Rescale on Azure, featuring AMD EPYC™ Processors, offers a cloud high performance computing solution designed specifically for reliability, compliance, and security throughout the entire HPC stack.

Azure adheres to 90 compliance certifications across industries, countries, and regions, offering a resilient international foundation built with remote collaboration in mind. Up the stack, Rescale’s software-defined security enforces proper IP handling and delivers encryption in transit with high-grade TLS and multi-layered encryption at rest with 256-bit AES. To bolster existing cloud security standards, Rescale builds in additional safeguards to ensure workflows are secure from end to end and compliant with SOC 2 Type-2, CSA, ITAR, HIPAA, FedRAMP Moderate, and GDPR.

As the global workforce grows more distributed, collaborative, and interdependent, we expect to see HPC initiatives and teams create new complexity for IT governance. Rescale is excited to see customers push the boundaries of innovation across internal departments and disciplines, and through partnerships like joint ventures and research consortiums (Tech Against Covid). For these purposes, Rescale provides organizations with the tools to easily manage teams and cleanly partition data to drive new insights and enable seamless collaboration without compromising sensitive data.

Rescale on Azure, featuring AMD EPYC™ Processors

As industry data points to continued rapid adoption of cloud HPC, the partners behind Rescale on Azure, featuring AMD EPYC™ Processors, remain committed to addressing traditional user concerns about cloud HPC through cutting-edge technological innovation. Those that challenge misconceptions about cloud HPC through adoption are realizing benefits that far outweigh historic scrutiny and perceived risk.

Rescale on Azure, featuring AMD EPYC™ Processors, is the cloud HPC solution built with simplicity in mind, to achieve new heights in scale and performance. For more information or inquiries, please contact [email protected].

Related Resource

Upcoming Webinar: October 13th at 12PM PT

Tune in to our upcoming webinar to see how teams are leveraging Rescale for turnkey access to Microsoft Azure’s new HBv3 virtual machines!

This blog was authored and reviewed by Garrett VanLee (Product Marketing, Rescale), Andrew Jones (Corporate Azure Engineering & Product Planning), and Sean Kerr (Product Marketing, AMD).
