Latest News

June 1, 2018

Since Google first gained public attention for its self-driving car project a few years ago, autonomous vehicles (AVs) from a variety of manufacturers have been racking up road miles and media coverage at an ever-increasing clip. They’ve also eaten up a larger and larger amount of compute power, both in the vehicles and in the data centers used to support their design and monitoring—making AVs a key market for high performance computing (HPC) resources.

The push for self-driving cars has been driven by safety and economics. Both self-driving technology and related advanced driver assistance systems (ADAS) could help reduce the number of car crashes and related fatalities, the majority of which are caused by human error. Long-haul trucking, delivery, taxi and ride sharing companies also hope to reduce their reliance on human drivers, which can bring down operational costs.

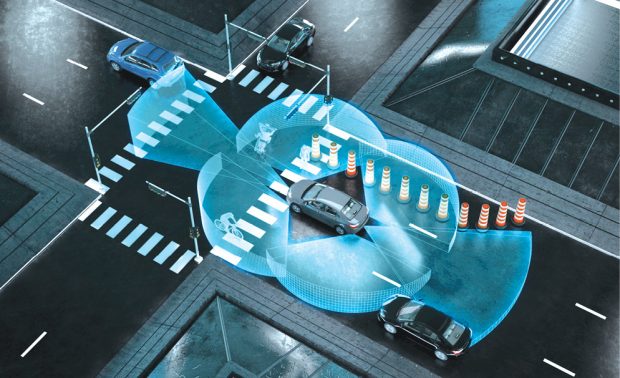

OPTIS, a virtual prototyping company, and LeddarTech Inc., the developer of digital signal processing technology used in automotive LiDARs, have partnered to enable the industrial simulation of advanced LiDAR solutions. Image: OPTIS/LeddarTech

Self-driving vehicles could eventually reshape infrastructure and help to usher in smarter cities, fundamentally changing the way we live, according to some experts. But before we get to that point, AVs have to be designed, and that requires a massive amount of data. According to Intel, self-driving vehicles could generate more than 4TB of data to the cloud in less than two hours. Designing and testing these vehicles will also rely on significantly more compute power than traditional vehicles. Proving out the reliability and safety of an AV will require massive scenario generation, simulation and data analysis on an unprecedented scale for the auto industry. There is simply no realistic way to physically test these systems against every possible scenario without millions or billions of miles of data.

A Rand Corp. study found that it would take literally billions of miles of road testing to prove the safety of AVs. To put that in perspective, companies like Google spin-off Waymo and Uber have only road tested their own AVs a few million miles over the past five years. At the time of the study’s release in 2016, Rand senior scientist Nidhi Kalra said that it would be “nearly impossible for autonomous vehicles to log enough test-driving miles on the road to statistically demonstrate their safety, when compared to the rate at which injuries and fatalities occur in human-controlled cars and trucks.”
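The statistical reasoning behind that kind of estimate can be sketched with a simple zero-failure calculation. This is a minimal illustration, not the study's actual method: it assumes failures arrive independently at a constant rate per mile, and the benchmark rate of 1.09 fatalities per 100 million miles is an assumed figure that does not come from this article. The function name `failure_free_miles` is hypothetical.

```python
import math


def failure_free_miles(rate_per_mile: float, confidence: float = 0.95) -> float:
    """Miles that must be driven with zero failures before one can claim,
    at the given confidence, that the true failure rate is below rate_per_mile.

    Assumes a constant-rate (Poisson) failure model, so the chance of seeing
    no failures in n miles is exp(-rate * n).
    """
    if rate_per_mile <= 0.0:
        raise ValueError("rate_per_mile must be positive")
    if not 0.0 < confidence < 1.0:
        raise ValueError("confidence must be strictly between 0 and 1")
    # Solve exp(-rate * n) = 1 - confidence for n.
    return -math.log(1.0 - confidence) / rate_per_mile


# Assumed benchmark for human-driven vehicles (illustrative, not from the article).
ASSUMED_FATALITY_RATE = 1.09 / 100_000_000

miles = failure_free_miles(ASSUMED_FATALITY_RATE)
print(f"{miles / 1e6:.0f} million failure-free miles needed")
```

Under these assumptions the answer is already in the hundreds of millions of miles, against the few million miles road tested so far. Stricter questions, such as showing that AVs are measurably safer than human drivers rather than merely no worse, push the requirement higher still.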

HPC Takes the Wheel

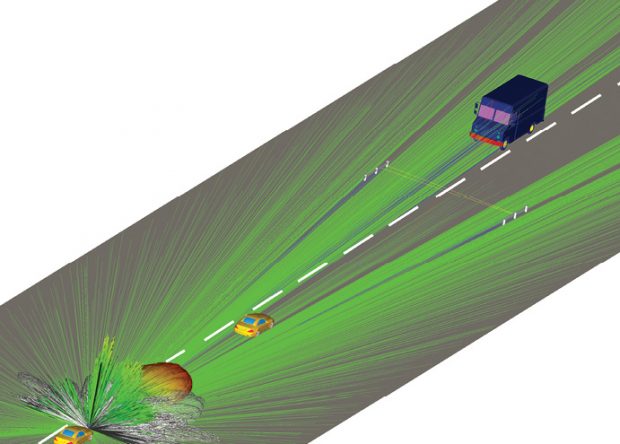

That means a greater reliance on simulation, but at a greater level of complexity. “Autonomous vehicles take system level simulation to a whole new level,” says Sandeep Sovani, director of the global automotive industry at ANSYS. “The system simulation has to include simulation of the sensors, electronics, software, actuators and other components. Even just depressing the brake pedal can have widespread effects on how the system performs. You have to simulate how all of those systems would respond to millions of situations the vehicle encounters on the road.”

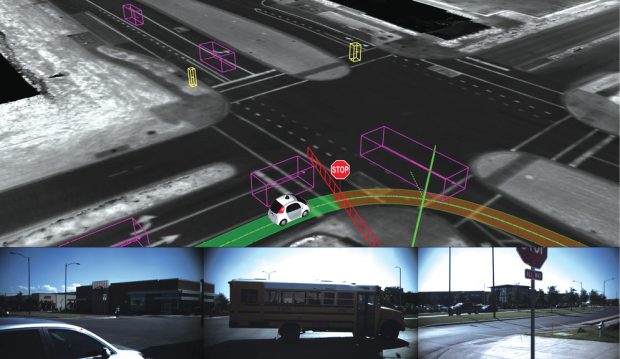

Simulations help autonomous vehicles “see” like humans would to avoid traffic and obstacles. Image: Waymo

Autonomous vehicles also have to mimic human responses, which means simulations have to include the idea of perception: does the AV “see” the world around it in the same way a human would?

That simulation effort will require a significant amount of HPC resources, which is why automakers are investing in their data centers. Ford, for example, has ponied up $200 million for a new data center to support its AV program, and Toyota has partnered with NTT Communications to build a global data center network.

“HPC computing is crucial for autonomous vehicles,” Sovani says. Those HPC resources can help companies run the closed-loop simulation tools needed to model these systems and model the millions of different environmental variations the cars will encounter.

Recently, Waymo gave the press and public a peek at its simulation solution for AVs, dubbed Carcraft. The company has 25,000 virtual AVs driving through 8 million miles of simulated roadways every day. The data generated by real world testing on the road and at a testing facility called Castle are fed into Carcraft, where problem scenarios can be created and extrapolated into thousands of variants. Once the self-driving vehicle in the simulator can successfully navigate those scenarios and thousands of variations based on them, the knowledge can be shared with Waymo’s entire self-driving vehicle network.
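The fan-out from one problem scenario into many variants can be pictured as a parameter sweep. The sketch below is illustrative only: the `Scenario` fields, the `make_variants` helper and the offsets are hypothetical and are not a description of how Carcraft is implemented.

```python
from dataclasses import dataclass, replace
from itertools import product
from typing import List, Sequence


@dataclass(frozen=True)
class Scenario:
    """A hypothetical, heavily simplified description of one driving scenario."""
    name: str
    ego_speed_mph: float    # speed of the simulated AV
    other_speed_mph: float  # speed of the other road user
    gap_m: float            # initial distance between the two


def make_variants(base: Scenario,
                  speed_deltas: Sequence[float],
                  gap_deltas: Sequence[float]) -> List[Scenario]:
    """Return every combination of speed and gap offsets applied to base."""
    variants = []
    for d_ego, d_other, d_gap in product(speed_deltas, speed_deltas, gap_deltas):
        variants.append(replace(
            base,
            ego_speed_mph=base.ego_speed_mph + d_ego,
            other_speed_mph=base.other_speed_mph + d_other,
            gap_m=base.gap_m + d_gap,
        ))
    return variants


base = Scenario("four_way_stop", ego_speed_mph=25.0, other_speed_mph=30.0, gap_m=20.0)
variants = make_variants(base, speed_deltas=[-5.0, 0.0, 5.0], gap_deltas=[-5.0, 0.0, 5.0, 10.0])
print(len(variants))  # 3 * 3 * 4 combinations
```

Even three parameters with a handful of values each yield dozens of cases, which suggests how a realistic parameter space multiplies into the thousands of variants described above, each of which has to be simulated.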

Massive Level of Simulation Complexity

That type of simulation on a massive scale is what will be required of every AV manufacturer. As autonomous vehicles move into Level 4 and Level 5 functionality, which will enable the vehicles to operate without a human driver (or even a steering apparatus) at all, they will rely on advanced deep learning, machine learning and artificial intelligence to travel safely down public roadways.

One challenge is that deep learning algorithms are programmed to “learn” single tasks, e.g., recognizing a yield sign. Recognizing each individual type of sign will require repeating the same level of learning. Developing an algorithm to recognize every type of road sign would require massive amounts of compute power and millions of images.

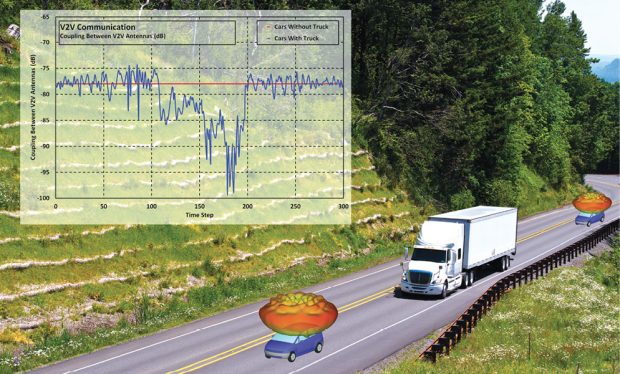

Communication technologies must be simulated to ensure reliable vehicle-to-vehicle communications. Image: ANSYS.

Consider other types of driving conditions. A road crew working on a closed lane of traffic would require a vehicle to cross a double yellow line on the road (something the vehicle would otherwise be trained not to do), recognize a flagman, respond to oncoming traffic, and perform other functions that a human driver takes for granted but that would require a significant amount of programming and learning for an autonomous system to navigate.

Nissan refers to this as “socially acceptable driving,” and it will be very hard to achieve. “It’s a difficult problem to solve,” said Maarten Sierhuis at the Nissan Research Center Silicon Valley, speaking at the HPC User Forum in Milwaukee last year. “This is human decision making, and we want cars that understand or maybe make decisions like a human. We try to mimic this human-like decision making. We have a group of social scientists that are doing this research, and trying to understand what human-like driving is all about. They are coming up with concepts like creeping, like piggy-backing, these kinds of concepts that we as humans are not even aware of that we are doing when we are driving.”

In part because of the difficulty of anticipating and simulating all of the possible scenarios, Nissan developed its Seamless Autonomous Mobility (SAM) concept. SAM relies on human intervention to help self-driving vehicles navigate particularly tricky scenarios, similar to having an air traffic controller for its AVs. Once a human mobility manager guides a vehicle through a trouble spot, the solution can be communicated to all other AVs via the cloud so they safely navigate the same scenario without human intervention.

The idea that AVs can share knowledge is gaining traction in the industry, and the data crunching behind those efforts will present another opportunity in the HPC space. Intel’s vision for AVs also includes “learning” via the collective experience of millions of cars. Intel’s technology powers Waymo’s vehicles, and Intel purchased Mobileye last year. Mobileye provides vision and machine learning, data analysis and mapping technology for AVs and ADAS solutions.

Industry Initiatives Abound

One initiative the combined Intel/Mobileye organization has already launched is a mapping program. The Mobileye 8 Connect collision avoidance system is being deployed in thousands of ride-share vehicles. The solution will harvest street mapping data to create high-definition crowdsourced maps via the Mobileye Road Experience Management solution. This type of map and infrastructure data will be critical for full autonomy.

Simulation tools are also emerging and evolving to meet the needs of autonomous vehicle development. At ANSYS, the company’s Simplorer systems-level simulation tool can be used for model-based systems engineering in the AV space, and the company’s Engineering Knowledge Manager can integrate different types of simulations and share data across departments.

French company OPTIS has developed light and sensor simulation systems targeted specifically at vehicles. The company expects the number of sensors in vehicles to exceed 30 by 2022. Vehicle designers can use simulation to test the performance of the LiDAR, radar and camera systems in the vehicle. The company recently formed a partnership with LiDAR provider LeddarTech to advance the development of self-driving vehicles. The OPTIS tool can validate the LiDAR model and simulate the correct response in real time via a virtual closed-loop simulation.

Microsoft has expanded its AirSim offering—originally developed for testing the safety of AI systems in drones—to the autonomous vehicle space. The open system includes APIs that can help new users create their own simulation tools for research purposes. In the UK, the University of Leeds Driving Simulator (UoLDS) is being leveraged for the HumanDrive project, an Innovate UK initiative to develop a self-driving car with human-like, natural controls by 2020.

Simulation will ultimately reduce development times and cost for these AV systems, but the scale of these simulations is on a new order of magnitude for the automotive industry—Waymo’s massive Carcraft effort will have to be replicated by other manufacturers.

Multidisciplinary testing will also be critical, given the effect that different vehicle systems have on each other during different types of driving conditions. Again, the need for large amounts of compute power, both on the vehicle and for data analysis, presents an opportunity for HPC providers.

Looking Ahead

And once these vehicles are on the road in large numbers, there will need to be a network of data centers and microdata centers in place to receive data from them and feed information to them. There will also need to be much greater computing power in the vehicles themselves. Intel estimates that self-driving cars will process as much as 1GB of data every second in order to respond quickly to driving conditions.
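To put the data figures quoted in this article on a common footing, a back-of-the-envelope conversion helps. The sketch below assumes decimal units (1TB = 10^12 bytes), reads the in-vehicle figure as one gigabyte per second, and treats "more than 4TB in less than two hours" as exactly 4TB in exactly two hours, so the upload rate it prints is a lower bound. The helper names are hypothetical.

```python
TB = 10**12  # decimal terabyte in bytes (assumption)
GB = 10**9   # decimal gigabyte in bytes (assumption)


def average_rate_gb_per_s(total_bytes: float, seconds: float) -> float:
    """Average data rate in GB/s for a volume moved over a time span."""
    return total_bytes / seconds / GB


def volume_tb(rate_gb_per_s: float, seconds: float) -> float:
    """Total volume in TB handled at a steady rate over a time span."""
    return rate_gb_per_s * GB * seconds / TB


# 4TB sent to the cloud over two hours of driving (lower bound on the rate).
upload_rate = average_rate_gb_per_s(4 * TB, 2 * 3600)

# 1GB processed on board every second, accumulated over one hour.
hourly_volume = volume_tb(1.0, 3600)

print(f"Average upload rate: {upload_rate:.2f} GB/s")
print(f"On-board data processed per hour: {hourly_volume:.1f} TB")
```

Taken at face value, the two estimates are the same order of magnitude: roughly half a gigabyte per second leaving the vehicle and up to a gigabyte per second handled inside it.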

Simulation tools need to continue to evolve to meet the needs of the autonomous vehicle industry, including increasingly complex driving scenarios. One important tool that carmakers will need is a way to automatically and efficiently create virtual environments for the simulations.

“Level 4 and 5 vehicles need to be simulated in virtual worlds where they can encounter a wide variety of situations,” Sovani says. “There are thousands of types of intersections, and you have to include different road topologies, pedestrians, and objects that you would find around a city. Right now, that’s done manually and can take months to create a model of a large urban intersection. We need a way to create these worlds using computational tools.”

About the Author

Brian Albright is the editorial director of Digital Engineering. Contact him at [email protected].

Automakers use simulation software to develop advanced driver assistance systems. Image: ANSYS
