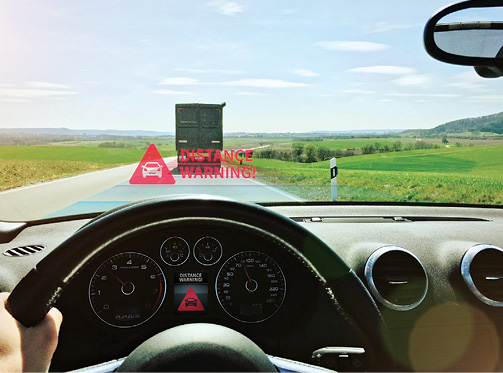

In this image, radar and lidar work together to determine safe driving distances between vehicles. The autonomous driving system leverages radar’s ability to “see” long distances and function in bad weather. Lidar, on the other hand, provides a more comprehensive picture of the vehicle’s surroundings. Use of these two sensors provides redundancy and precludes catastrophic failure. Image courtesy of Infineon Technologies.

December 1, 2017

Today, human error causes nearly 96% of all motor vehicle collisions. Automotive engineers hope to dramatically change that with the development of the autonomous car. Once thought to be the stuff of science fiction, these vehicles will likely begin to appear on the highways three years from now. But a lot must happen before the driverless car goes mainstream.

Perhaps the greatest challenge lies in the fact that driverless vehicles must have sensing and control systems that exceed the capabilities of human drivers. To understand the environment in which they operate, autonomous cars will rely on a combination of sensors, which will include ultrasonic, image, radar and lidar sensors. Each sensor technology will add a layer of information, which—when combined with the information provided by the other sensors—will create a detailed picture of the vehicle’s surroundings.

Top: The latest manifestation of the digital revolution, the autonomous car, stands poised on the verge of leaving the research lab and entering the mainstream. This mind-boggling advance represents the latest product of the union of sensors, communications, processors and software. Image courtesy of Melexis.

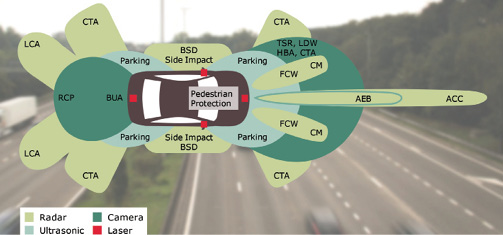

Above: Some autonomous vehicle designs include as many as 10 radar sensors, together with camera, laser (lidar) and ultrasonic systems. Working together, these sensing systems create a detailed map of the vehicle’s surroundings, ensuring safety and enabling navigation. Image courtesy of Infineon Technologies.

Together, these sensing devices will digitally map all relevant elements of the vehicle’s operating environment, enabling it to navigate highway systems, detect obstacles, conform to traffic laws and determine the car’s response to fast-developing problems. “To create a viable automated car, it is important to recreate reliable judgment,” says Vik Patel, segment marketing manager at Infineon Technologies. “Here, sensors play a key role by replacing all of the driver’s senses. The complexity and reliability of human perception can only be recreated with technology if multiple, diverse sensors detect various aspects of the environment simultaneously.”

Sensing the driving environment, however, represents only part of the challenge. Autonomous driving systems must be able to convert sensor data into actionable information fast enough to allow the control system to react to real-time situations. This will require substantial enhancement of processing capabilities across the sense, compute and actuate function blocks of vehicles. Image sensors and other sensing subsystems significantly increase the processors’ workloads, and system complexity will increase exponentially to achieve the level of functionality and dependability required by autonomous vehicles.

Sounding Out Obstacles

The sensors that will enable autonomous driving vary greatly in complexity and functionality. Perhaps the simplest sensing device used in this application is the ultrasonic sensor. These sensors transmit a high-frequency sound pulse and then measure the length of time it takes for the echo of the sound to reflect back.
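The echo-timing principle reduces to one formula: the pulse covers the distance twice, so the distance is the speed of sound times the round-trip time, divided by two. The sketch below assumes sound travels at about 343 m/s (dry air near 20 °C); production sensors compensate for temperature, which this ignores.

```python
SPEED_OF_SOUND_M_S = 343.0  # assumed: dry air at about 20 °C


def echo_distance_m(round_trip_s: float, speed_m_s: float = SPEED_OF_SOUND_M_S) -> float:
    """Distance to the reflecting object from the measured round-trip echo time.

    The pulse travels to the object and back, so the one-way distance is
    half of speed multiplied by elapsed time.
    """
    if round_trip_s < 0:
        raise ValueError("round-trip time must be non-negative")
    return speed_m_s * round_trip_s / 2.0


# An echo that returns after 5.83 ms corresponds to an object about 1 m away.
print(f"{echo_distance_m(5.83e-3):.3f} m")
```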

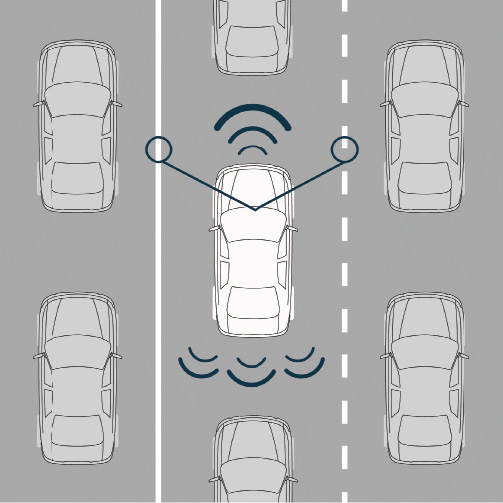

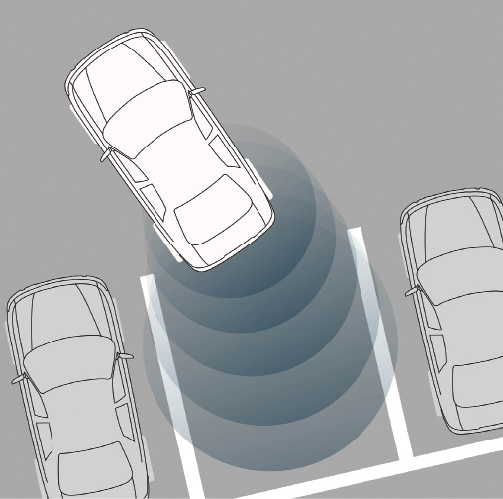

Ultrasonic sensors enable the autonomous car to perform slow-speed, precision maneuvers, such as (top image) moving through busy city streets and (bottom image) parking. Although they are effective only at speeds less than 10 mph, ultrasonic sensors deliver detection precision measured in centimeters. Image courtesy of Melexis.

Ultrasonic sensors facilitate parking and slow-speed navigation in congested spaces. For example, when moving around in a parking garage or through busy city streets, these devices enable the vehicle to maneuver adroitly in dense traffic and through narrow spaces.

The sensors’ strength lies in their ability to measure distance to objects with great precision. “No other sensor can measure short distances down to a few centimeters while having at the same time a medium-range capability of about 7 meters,” says Peter Riendeau, project manager for development at Melexis.

Their weakness is the slow speed of their signals (sound waves) and the relatively slow update rate. As a result, they perform well only at speeds of less than 10 mph. Another limitation is their performance in bad weather. Buildups of ice and snow on the face of the sensor attenuate its signals.
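A back-of-the-envelope calculation shows why the slow signal limits vehicle speed. Assuming the roughly 7 m range quoted above and sound at about 343 m/s, each echo cycle takes at least about 41 ms, and the car keeps moving the whole time. This sketch ignores processing time and any pause between pings, so real update intervals are longer still.

```python
SPEED_OF_SOUND_M_S = 343.0  # assumed: dry air at about 20 °C
MPH_TO_M_S = 0.44704        # exact conversion: 1 mph = 0.44704 m/s


def echo_wait_s(max_range_m: float) -> float:
    """Minimum time to wait for an echo from the farthest object of interest."""
    return 2.0 * max_range_m / SPEED_OF_SOUND_M_S


def travel_during_wait_m(speed_mph: float, max_range_m: float) -> float:
    """Distance the car moves while one measurement cycle completes."""
    return speed_mph * MPH_TO_M_S * echo_wait_s(max_range_m)


for mph in (5, 10, 30):
    moved_cm = travel_during_wait_m(mph, 7.0) * 100
    print(f"{mph:>2} mph: {moved_cm:.0f} cm per echo cycle")
```

At 10 mph the car covers roughly 18 cm per cycle, already many times the centimeter-level precision the sensor can deliver, and the gap widens as speed rises.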

Despite these flaws, ultrasonic sensors provide a simple, low-cost alternative to more sophisticated systems. In addition, they can work with other types of sensors, such as radar and lidar, which do not provide measurement resolution at close range.

Adding Vision

Image sensors add another dimension of awareness to the autonomous car, performing a role similar to that of the driver’s eyesight. These devices can detect color, shapes, textures, contrast and fonts, enabling the control system to interpret traffic signs, traffic lights and lane markings, as well as detect and classify objects.

Specialized camera systems can extend the car’s “perception” even further. For example, stereo cameras can provide 3D vision, facilitating range determination. And sensors with infrared light sources can “see” greater distances at night without resorting to high-brightness, visible-wavelength light sources, which can blind oncoming drivers.

Another advance in vision sensing can be found in the time-of-flight (ToF)-based image sensor, which measures the time it takes for infrared light to travel from the camera to the object and back. “This time delay is directly related to the distance of the object,” says Patel. “Compared to other 3D measurement methods, with ToF, the depth data is measured directly and doesn’t need to be determined via complex algorithms.”
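Because each pixel reports its own round-trip time, building a depth image is a per-pixel multiplication rather than a matching problem. The sketch below assumes the round-trip time for every pixel is already available in nanoseconds; many commercial ToF cameras actually infer that delay from the phase shift of modulated light, a step omitted here. The frame values are hypothetical.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0  # exact value in vacuum; air is close enough here


def depth_map_m(round_trip_ns: list[list[float]]) -> list[list[float]]:
    """Convert per-pixel round-trip times (nanoseconds) into depths (meters).

    Each pixel's depth is c * t / 2. No stereo matching or feature extraction
    is involved, because the time is measured directly at every pixel.
    """
    return [[SPEED_OF_LIGHT_M_S * t_ns * 1e-9 / 2.0 for t_ns in row]
            for row in round_trip_ns]


# Hypothetical 2x3 frame: roughly 6.67 ns of round trip per meter of depth.
frame_ns = [[6.67, 13.34, 20.01],
            [33.36, 66.71, 133.43]]
for row in depth_map_m(frame_ns):
    print(" ".join(f"{d:5.2f}" for d in row))
```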

Despite these advances, vision technology still needs improvements in certain areas. For example, the range of most cameras falls short. The “sight” of these sensors should be extended to 250 meters to enable more anticipatory driving. In addition, adverse weather conditions and lighting extremes can cripple image sensors. Compounding these problems, as the resolution of these sensors increases, so does the need for greater levels of processing. This in turn increases operating costs and power consumption.

Finally, recognition algorithms must be improved. For example, current software recognizes pedestrians only 95% of the time, which is bad news for the remaining 5%.

In addition to these factors, design engineers should also be mindful of bus speeds and the network’s ability to handle the large quantities of data generated by cameras. “Using vision sensors implies that the data stream will need to reach the processing resources with as little delay as possible,” says Riendeau. “Currently, automotive bus speeds are far behind those of typical office data networks. Deploying a high-speed digital data bus in a vehicle is incredibly challenging due to the many noise sources present. The tradeoff between preprocessing signals at the sensor and having raw signals for analysis at the control system is a challenge for autonomous driving system architects.”
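To see why bus speed becomes a bottleneck, it helps to estimate how much raw data a single camera produces. The camera parameters and camera count below are hypothetical, chosen only to illustrate the arithmetic; the result ignores compression and protocol overhead.

```python
def raw_video_bit_rate_gbps(width: int, height: int, bits_per_pixel: int, fps: float) -> float:
    """Uncompressed video data rate in gigabits per second."""
    return width * height * bits_per_pixel * fps / 1e9


# Hypothetical camera: 1920 x 1080 pixels, 12-bit raw output, 30 frames/s.
per_camera = raw_video_bit_rate_gbps(1920, 1080, 12, 30)
cameras = 8  # hypothetical surround-view configuration

print(f"one camera:    {per_camera:.2f} Gbit/s")
print(f"{cameras} cameras:     {cameras * per_camera:.2f} Gbit/s")
```

Even one such camera needs roughly 0.75 Gbit/s, several hundred times the 1 Mbit/s maximum of classic CAN, which is why the tradeoff between preprocessing at the sensor and shipping raw data matters.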

Radio Detection and Ranging

Another sensor that plays a key role in the autonomous car is radar, a sensor that has a long history with the automotive industry. These sensors determine the location, speed and trajectory of objects around the vehicle by measuring the time that elapses between transmitting a radio pulse and receiving the echo.
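The timing-based ranging described above can be sketched in a few lines, along with a simple way to estimate speed by differencing successive ranges. This is a deliberate simplification: many automotive radars use frequency-modulated continuous-wave (FMCW) designs and obtain relative speed from the Doppler shift rather than by differencing ranges. The target distances and the 50 ms interval are hypothetical.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0


def radar_range_m(echo_delay_s: float) -> float:
    """One-way range from the round-trip delay of a radio pulse."""
    return SPEED_OF_LIGHT_M_S * echo_delay_s / 2.0


def range_rate_m_s(delay_first_s: float, delay_second_s: float, interval_s: float) -> float:
    """Approximate relative speed from two range measurements interval_s apart.

    Negative values mean the object is getting closer.
    """
    return (radar_range_m(delay_second_s) - radar_range_m(delay_first_s)) / interval_s


# Hypothetical echoes from a car 60 m ahead that is 59.5 m away 50 ms later.
first = 2 * 60.0 / SPEED_OF_LIGHT_M_S
second = 2 * 59.5 / SPEED_OF_LIGHT_M_S
print(f"range: {radar_range_m(first):.1f} m")
print(f"range rate: {range_rate_m_s(first, second, 0.05):.1f} m/s (negative = closing)")
```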

Radar sensors come in short-, medium- and long-range models, supporting ranges of 0.2-30 meters, 30-80 meters and 80-200 meters, respectively. In the autonomous driving application, deploying short- and long-range radars all around the vehicle enables the system to track the speed of other vehicles in real time and provide redundancy for other sensors.

Radar outperforms cameras and lidar in bad weather, but it has less angular accuracy. In addition, radar generates less data than cameras and lidar. This is good and bad. It means that reduced demands are made on the vehicle’s bus, but it also means the system has less information upon which to base its decisions.

Unique among all the sensors used in autonomous cars, radar does not require line of sight. Because radio waves reflect off surrounding surfaces, the sensor can use these reflections to detect objects hidden behind obstacles.

Although radar offers significant advantages, growing safety and reliability requirements highlight areas of radar technology that need improvement. For instance, 2D radars cannot determine an object’s height because the sensor only scans horizontally. This deficiency becomes relevant when the vehicle passes under a bridge or enters a parking garage with a low entryway. 3D radars will remedy this shortcoming, but they are still being developed.
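With a 3D radar that also reports an elevation angle, an object's height follows from basic trigonometry, which is exactly what a horizontally scanning 2D radar cannot supply. The sketch assumes a flat road, a known mounting height and a safety margin; the mounting height, vehicle height and margin are hypothetical values, not figures from the article.

```python
import math


def target_height_m(range_m: float, elevation_deg: float, sensor_height_m: float) -> float:
    """Height of a reflector above a flat road, from range and elevation angle."""
    return sensor_height_m + range_m * math.sin(math.radians(elevation_deg))


def can_pass_under(range_m: float, elevation_deg: float, sensor_height_m: float,
                   vehicle_height_m: float, margin_m: float = 0.2) -> bool:
    """True if the overhead structure clears the vehicle plus a safety margin."""
    height = target_height_m(range_m, elevation_deg, sensor_height_m)
    return height >= vehicle_height_m + margin_m


SENSOR_HEIGHT_M = 0.5   # hypothetical bumper-mounted radar
VEHICLE_HEIGHT_M = 1.8  # hypothetical vehicle height

print(can_pass_under(40.0, 5.0, SENSOR_HEIGHT_M, VEHICLE_HEIGHT_M))  # bridge deck
print(can_pass_under(10.0, 8.0, SENSOR_HEIGHT_M, VEHICLE_HEIGHT_M))  # low garage entry
```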

Mapping the Operating Environment

One of the newer sensing technologies used in autonomous cars is lidar, or light detection and ranging. This technology uses ultraviolet, visible or near-infrared light to image objects, determining the distance between the autonomous vehicle and an object by measuring the time it takes for the light to travel from the sensor to the object and back again. Lidar sensors deployed in automotive systems typically use 905 nm-wavelength lasers that can provide ranges of 200 meters in restricted fields of view, enabling the sensor to generate a detailed 3D map of the vehicle’s surroundings. Some companies even offer 1550 nm lidar, which delivers greater range and accuracy.
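Each laser return becomes one point of that 3D map: the time of flight gives the range, and the beam's known azimuth and elevation place the point in space. A minimal sketch, assuming a sensor-centered frame with x forward, y left and z up; real sensors also correct for mounting pose and for vehicle motion during a sweep, which this ignores.

```python
import math
from typing import NamedTuple

SPEED_OF_LIGHT_M_S = 299_792_458.0


class Point(NamedTuple):
    x: float  # meters forward of the sensor
    y: float  # meters to the left
    z: float  # meters up


def lidar_point(round_trip_s: float, azimuth_deg: float, elevation_deg: float) -> Point:
    """Turn one laser return into a 3D point in the sensor's own frame."""
    r = SPEED_OF_LIGHT_M_S * round_trip_s / 2.0   # time of flight -> range
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    horizontal = r * math.cos(el)                  # projection onto the horizontal plane
    return Point(horizontal * math.cos(az), horizontal * math.sin(az), r * math.sin(el))


# A return from 100 m away, 90 degrees to the left, level with the sensor.
t = 2 * 100.0 / SPEED_OF_LIGHT_M_S
print(lidar_point(t, 90.0, 0.0))
```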

This mapping process generates huge amounts of data, delivering significant benefits. “The sensor finely and rapidly slices the world around the vehicle, generating between 300K to 2.2M data points a second,” says Anand Gopalan, CTO of Velodyne. “This rich data set can be used to perform a variety of functions critical to autonomy, such as generating a high-definition 3D map of the [vehicle’s] world, localizing the vehicle in real time within this map, and sensing, identifying, classifying and avoiding obstacles.”

The downside of creating such large amounts of data is that it requires autonomous vehicles to have very powerful processors to handle the information. This raises the cost of designs and taxes the vehicle’s communications bus, potentially compromising real-time decision-making.
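The point rates Gopalan cites translate directly into link bandwidth. The sketch assumes a hypothetical 16 bytes per point (x, y, z and intensity as 32-bit floats) and ignores timestamps and packet overhead, so real figures would be higher.

```python
def lidar_data_rate_mbit_s(points_per_s: float, bytes_per_point: int) -> float:
    """Sustained data rate of one lidar, in megabits per second."""
    return points_per_s * bytes_per_point * 8 / 1e6


BYTES_PER_POINT = 16  # hypothetical: x, y, z, intensity as 32-bit floats

for pts in (300_000, 2_200_000):  # the range quoted above
    rate = lidar_data_rate_mbit_s(pts, BYTES_PER_POINT)
    print(f"{pts:>9,} points/s -> {rate:6.1f} Mbit/s")
```

Under these assumptions a single sensor produces roughly 38 to 282 Mbit/s, and a vehicle with several lidars multiplies that load.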

This impressive data collection ability is not hindered by harsh lighting conditions, as is often the case with other sensors, like cameras. Lidar sensors mitigate the effects of ambient light extremes by using optical filters. The filters not only counter sensitivity to harsh lighting but also prevent spoofing from other lidars.

Despite its many strengths, this sensor technology faces serious hurdles that preclude it from meeting critical criteria required for autonomous driving. One of the automotive industry’s most pressing challenges lies in the development and manufacture of automotive-grade, solid-state lidars for mass-market vehicles.

“The lidars on the market today, which are used in AD [autonomous driving] prototype developments, are quite simply incapable of meeting the requirements for mass-market AD deployments,” says Pier-Olivier Hamel, product manager, automotive solutions, at LeddarTech.

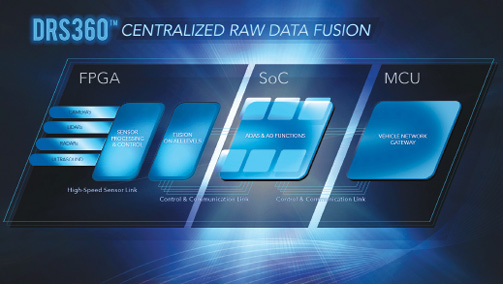

A centralized architecture like Mentor Graphics’ DRS360 autonomous driving platform connects raw sensor data directly to a centralized compute module. This approach promises to better preserve the integrity of sensor data and complete the fusion process with little or no latency. Image courtesy of Mentor Graphics.

The factors behind this challenge become clear when examining the two main categories of lidar sensors. The first type, flash (or solid-state) lidar, uses a fixed, diffuse beam, and the technology is low-cost, small and robust. Its range and resolution, however, have historically been limited, which significantly restricts its usefulness for autonomous vehicles. The second type, mechanical scanning lidar, is powerful, using collimated laser beams to scan up to 360° around the car. Its drawbacks are a complex design that makes it difficult to manufacture and maintain, and a price that is prohibitive for mass-market deployment.

Recently, however, some design teams have begun to shift from using mechanical scanning lidar to solid-state lidar. Solid-state sensors currently have lower field-of-view coverage, but their lower cost may open the door for using multiple sensors to cover a larger area.
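The multiple-sensor approach is simple arithmetic: divide the coverage you need by each sensor's usable field of view. The field-of-view and overlap values below are hypothetical; adjacent sensors are assumed to overlap by a fixed angle so there are no blind seams.

```python
import math


def sensors_for_coverage(total_deg: float, sensor_fov_deg: float, overlap_deg: float = 0.0) -> int:
    """Number of identical sensors needed to cover total_deg of azimuth.

    Adjacent sensors share overlap_deg, so each contributes
    sensor_fov_deg - overlap_deg of new coverage.
    """
    effective = sensor_fov_deg - overlap_deg
    if effective <= 0:
        raise ValueError("overlap must be smaller than the field of view")
    return math.ceil(total_deg / effective)


print(sensors_for_coverage(360, 120))      # hypothetical 120° units, no overlap
print(sensors_for_coverage(360, 120, 10))  # same units with 10° overlap
print(sensors_for_coverage(360, 60))       # hypothetical narrower 60° units
```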

One approach that promises to resolve the cost/performance dilemma is to use signal processing algorithms that acquire, sequence and digitally process light signals, improving solid-state lidar’s sensitivity, noise immunity and data-extraction capabilities.
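One widely used form of such processing is averaging (accumulation): fire many pulses at the same scene and average the returns sample by sample. Uncorrelated noise shrinks by roughly the square root of the number of shots while the echo stays put. This is a generic illustration on synthetic data, not LeddarTech's or any vendor's actual algorithm; the waveform length, echo position and noise level are hypothetical.

```python
import random
import statistics


def average_waveforms(waveforms: list[list[float]]) -> list[float]:
    """Sample-by-sample mean of N repeated acquisitions of the same scene."""
    n = len(waveforms)
    return [sum(column) / n for column in zip(*waveforms)]


def synthetic_shot(rng: random.Random, length: int = 200, echo_index: int = 50,
                   echo_amplitude: float = 1.0, noise_sigma: float = 1.0) -> list[float]:
    """One noisy return: Gaussian noise plus a weak echo at a single sample."""
    shot = [rng.gauss(0.0, noise_sigma) for _ in range(length)]
    shot[echo_index] += echo_amplitude
    return shot


rng = random.Random(0)                       # fixed seed for repeatability
shots = [synthetic_shot(rng) for _ in range(64)]
averaged = average_waveforms(shots)

# Estimate noise from samples well away from the echo.
single_noise = statistics.pstdev(shots[0][100:])
averaged_noise = statistics.pstdev(averaged[100:])
peak_index = max(range(len(averaged)), key=averaged.__getitem__)
print(f"noise: {single_noise:.3f} -> {averaged_noise:.3f}, echo at sample {peak_index}")
```

With 64 shots the noise should drop by about a factor of eight, which makes an echo that was buried in a single shot stand out clearly.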

Complementary Data Streams

One of the fundamental concepts of autonomous car design is that no single sensor can be good at everything. Each type excels at some tasks but is poorly suited to others. “Autonomous driving technology requires a comprehensive suite of sensors to collect all necessary information needed to recreate a vehicle’s environment,” says Hamel. “This advanced ‘artificial vision’ enables the vehicle to navigate safely and perform required decision-making while en route to a destination.”

But this reliable decision-making does not require unanimous agreement among the various sensors. “Based on collected information from camera, radar, lidar and also ultrasonic systems, a car can make a so-called ‘two-out-of-three’ decision,” says Patel. “If two out of three values coincide, this value is interpreted as being correct and is then further processed. The different characteristics of each sensor technology increase safety by providing complementary data.”
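A two-out-of-three vote can be sketched as follows. The tolerance that decides whether two readings "coincide" is an assumption, and averaging the closest agreeing pair is one design choice among several (taking the median is another); this is an illustration, not Infineon's implementation.

```python
from typing import Optional


def two_out_of_three(a: float, b: float, c: float, tolerance: float) -> Optional[float]:
    """Return a value agreed on by at least two of three sensors, else None.

    Two readings coincide if they differ by no more than tolerance. The
    closest agreeing pair is averaged; if no pair agrees, the caller must
    treat the measurement as unreliable.
    """
    pairs = [(a, b), (a, c), (b, c)]
    x, y = min(pairs, key=lambda p: abs(p[0] - p[1]))
    if abs(x - y) <= tolerance:
        return (x + y) / 2.0
    return None


# Hypothetical distance readings in meters from camera, radar and lidar.
print(two_out_of_three(24.8, 25.1, 31.0, tolerance=0.5))  # camera and radar agree
print(two_out_of_three(18.0, 25.1, 31.0, tolerance=0.5))  # no pair agrees
```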

The complementary data streams also prevent system-wide failure. “The different spectrums being applied by each technology make their data streams complementary, but not strictly redundant,” says Riendeau. “The goal is to have multiple sensing technologies that can provide the system with ‘graceful degradation’ in performance. This means the car’s overall sensing system has the ability to maintain limited functionality even when a significant portion of it has been compromised. Essentially, graceful degradation prevents catastrophic failure … Instead, the operating efficiency or speed declines gradually as the number of failing components grows.”
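Graceful degradation can be pictured as a policy that lowers the allowed speed as sensing modalities drop out, rather than shutting down at the first fault. The sensor list and speed caps below are entirely hypothetical and serve only to show the shape of such a policy.

```python
PRIMARY_SENSORS = ("camera", "radar", "lidar")

# Hypothetical speed caps (km/h) keyed by the number of healthy primary sensors.
SPEED_CAP_KMH = {3: 130, 2: 60, 1: 20, 0: 0}


def speed_cap_kmh(healthy: set[str]) -> int:
    """Maximum permitted speed given which primary sensors are still healthy."""
    count = sum(1 for sensor in PRIMARY_SENSORS if sensor in healthy)
    return SPEED_CAP_KMH[count]


for healthy in ({"camera", "radar", "lidar"}, {"radar", "lidar"}, {"radar"}, set()):
    print(sorted(healthy), "->", speed_cap_kmh(healthy), "km/h")
```

A cap of 0 km/h here stands for bringing the vehicle to a controlled stop; performance declines step by step instead of failing all at once.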

Making the Leap from Sensing to Control

Achieving the right mix of sensors and optimizing their performance for autonomous driving is important, but this application also requires that the system analyze sensor data and react to even the most complex driving scenarios in real time. This is where sensor fusion comes into play. The catch here is that the data processing where fusion occurs must be tailored for minimal latency.

The sensors enabling autonomous driving generate massive amounts of data. “The challenge here involves capturing all these data in real time, pulling it together to create a full model of the vehicle’s environment, and then having the capability to process the data in real time while adhering to the stringent safety and power requirements of the automotive market,” says Amin Kashi, director of ADAS and autonomous driving at Mentor Graphics.

These demands go a long way in determining the processing and communications resources required by autonomous driving. “To achieve a fast decision time, you need the right data at the right time—high-speed network, with low latency—and your computer must be able to process in parallel a significant amount of data,” says Marc Osajda, business development manager for MEMS sensors at NXP.

To meet these requirements, development teams have been looking at two approaches. In the first, all data processing and decision-making occurs at a single, centralized location in the vehicle, working with raw, or unprocessed, data provided by the various sensors. The second is a distributed system, where preprocessing occurs locally at the sensor node before “data objects” are passed downstream, where sensor fusion takes place.

In the centralized approach, the sensors send all the data they capture—unprocessed—directly to the central computer for fusion and decision-making. This architecture promises to better preserve the integrity of sensor data by providing the central computer with all the data available to make the appropriate decision. “In raw data systems, all captured data is fused in its ‘raw’ state, meaning you preserve the data fully intact and then fuse that raw data in time and space,” says Kashi. “Afterwards, raw data systems can process only the portions of these data that are relevant to each unique situation and required by each specific application.”
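Fusing raw data "in time" starts with pairing measurements captured at nearly the same instant. A minimal sketch, assuming every sensor stamps its data against a shared clock; the frame timestamps and the allowed skew are hypothetical, and spatial alignment (transforming each sensor's data into a common vehicle frame) would follow as a separate step.

```python
import bisect


def nearest_index(timestamps: list[float], t: float) -> int:
    """Index of the timestamp closest to t; timestamps must be sorted."""
    i = bisect.bisect_left(timestamps, t)
    if i == 0:
        return 0
    if i == len(timestamps):
        return len(timestamps) - 1
    # Choose whichever neighbor is closer to t.
    return i if timestamps[i] - t < t - timestamps[i - 1] else i - 1


def pair_by_time(camera_ts: list[float], lidar_ts: list[float],
                 max_skew_s: float) -> list[tuple[int, int]]:
    """Pair each camera frame with the nearest lidar sweep within max_skew_s."""
    pairs = []
    for ci, t in enumerate(camera_ts):
        li = nearest_index(lidar_ts, t)
        if abs(lidar_ts[li] - t) <= max_skew_s:
            pairs.append((ci, li))
    return pairs


camera_ts = [0.000, 0.033, 0.067, 0.100]  # hypothetical ~30 frames/s
lidar_ts = [0.000, 0.100, 0.200]          # hypothetical 10 sweeps/s
print(pair_by_time(camera_ts, lidar_ts, max_skew_s=0.02))
```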

There is, however, a downside to this approach. “The drawback of such a configuration is that the central computer must manipulate and analyze a lot of data, and then you also need a rather powerful and large-bandwidth communication link between the sensors and the central computer to transfer all the raw data,” says Osajda.

On the other hand, in a distributed architecture, microcontrollers at the sensor node pre-process the raw data so that only the most relevant information [objects] is passed on to the central processor for decision making. “This architecture requires more computing power at the sensor nodes and can eventually introduce some latency in the decision process,” says Osajda. “However, the benefit is that you don’t overload the central computer with a huge amount of data. The choice really depends on the capabilities of your sensors, the bandwidth of your network and the processing capability of your central brain.”
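The bandwidth side of this tradeoff can be quantified by comparing what a single lidar node would send in each architecture. All figures below (points per frame, bytes per point, object count and object message size) are hypothetical.

```python
def link_load_mbit_s(bytes_per_frame: int, frames_per_s: float) -> float:
    """Sustained data rate a sensor-to-computer link must carry."""
    return bytes_per_frame * frames_per_s * 8 / 1e6


# Hypothetical lidar node: 100,000 points per frame, 16 bytes per point, 10 frames/s.
raw = link_load_mbit_s(100_000 * 16, 10)
# The same node after local preprocessing: up to 50 objects, 32 bytes each.
objects = link_load_mbit_s(50 * 32, 10)

print(f"centralized (raw):     {raw:8.3f} Mbit/s")
print(f"distributed (objects): {objects:8.3f} Mbit/s")
```

Under these assumptions the object list is about 1,000 times smaller, but the saving comes at the cost of computing power at the node and of discarding raw detail the central computer can no longer revisit.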
