More than a Question of Energy

Performance, power consumption, and cooling make up a complex stew, leaving the search for energy efficiency in HPC systems with no simple recipe for success.

Latest News

June 30, 2009

By Tom Kevan

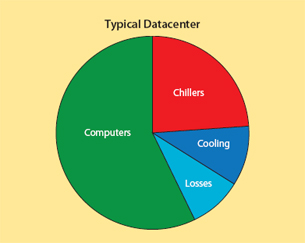

Figure 1: In a typical datacenter, just 50% to 60% of the power entering the datacenter goes to computers and processors while 30% to 35% goes to chillers, computer-room AC units (typically only 60% to 80% efficient), and cooling motors, fans, and pumps. Electrical losses due to inefficiency and AC-to-DC conversion account for 10% to 15% of power. Chart courtesy of Cray, Inc.

If you’re looking for simple, straightforward guidelines on the use of technologies, designs, and best practices to achieve energy efficiency in high-performance computing (HPC) systems, forget it. Answers to this question rapidly become a confusing swirl of what often appear to be conflicting goals and attributes. Performance, parallelization, power consumption, and cooling are critical qualities screaming for balance. Unfortunately, the right balance varies with each application.

A Question of Balance

Begin by recognizing that performance is the holy grail of HPC. The ultimate goal is to attain the ability to do the computing work—to solve often large, complex problems—as quickly and accurately as possible. Unfortunately, there isn’t one design approach that’s right for all situations.

Now add to the mix the search for energy efficiency. One approach to reducing power consumption has been to reduce the voltage and frequency of the processors.

Four or five years ago, you had single-core processors that operated at 3 GHz. Those ran very hot and consumed considerable energy. Now HPC clusters use dual-core or quad-core processors, and an average system has hundreds of cores, with the largest systems having more than 150,000 cores.

If you look at the frequency of each of the cores, however, it may be half the 3 GHz found in the older systems. That saves energy because you are dialing back each processor core. So it looks as if you are improving performance and saving energy.

Here’s the catch. To do the same job, you are using more processors. So even with the cores running at the reduced frequency, you are using more energy. The paradox is that while clusters are becoming more energy efficient, in some ways they are moving away from energy efficiency.
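The catch can be illustrated with the standard first-order model of CMOS dynamic power, in which power scales with switched capacitance, the square of supply voltage, and clock frequency. The sketch below is illustrative only: the function names, the normalized capacitance, and the voltages are assumptions chosen to show the trend, not figures from any vendor, and leakage and non-core power are ignored.

```python
def dynamic_power(capacitance, voltage, frequency_hz):
    """First-order CMOS dynamic power: P = C * V^2 * f."""
    return capacitance * voltage ** 2 * frequency_hz


def chip_power(cores, capacitance, voltage, frequency_hz):
    """Total dynamic power of identical cores (leakage and uncore ignored)."""
    return cores * dynamic_power(capacitance, voltage, frequency_hz)


# Hypothetical, normalized values used only to show the trend.
C = 1e-9  # effective switched capacitance per core (assumed)

single = chip_power(cores=1, capacitance=C, voltage=1.2, frequency_hz=3.0e9)
quad_same_v = chip_power(cores=4, capacitance=C, voltage=1.2, frequency_hz=1.5e9)
quad_low_v = chip_power(cores=4, capacitance=C, voltage=1.0, frequency_hz=1.5e9)

print(f"1 core  @ 3.0 GHz, 1.2 V: {single:.2f} W")
print(f"4 cores @ 1.5 GHz, 1.2 V: {quad_same_v:.2f} W")
print(f"4 cores @ 1.5 GHz, 1.0 V: {quad_low_v:.2f} W")
```

Under these assumptions, halving the frequency halves the power of each core, but four such cores together draw twice what the single fast core did. Lowering the voltage as well narrows the gap without closing it.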

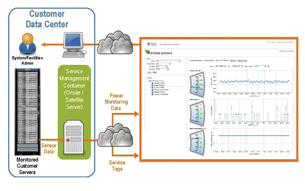

Figure 2: The Sun Intelligent Power Monitoring Service makes it possible for customers to improve power efficiencies via monitoring, reporting, and forecasting energy consumption. This subscription-based network service provides direct visibility via a Web interface. Image courtesy of Sun Microsystems, Inc.

Now think about what you have just done from a software standpoint. You have increased the parallelism of the system. You’ve just moved from the one-way parallelism of a few years ago to four-way parallelism with today’s quad-core processors. Don’t forget that a lot of engineering software is written for single-core processors. Only some applications are easy to parallelize, meaning they can readily be subdivided into many independent small problems. If the commercial software you’re using doesn’t parallelize easily, you may not be able to do the job any faster, and you’re using more energy. So you really need to balance performance and power consumption not in the abstract, but in the applications you are trying to run.
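Amdahl's law gives a quick way to estimate how much a given application can gain from extra cores. The sketch below is a minimal illustration. The function name and the sample parallel fractions are assumptions, not measurements of any particular engineering code.

```python
def amdahl_speedup(parallel_fraction, cores):
    """Upper bound on speedup when only part of a job can run in parallel."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if cores < 1:
        raise ValueError("cores must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / cores)


# Hypothetical applications: fully serial, half parallel, highly parallel.
for fraction in (0.0, 0.5, 0.95):
    speedup = amdahl_speedup(fraction, cores=4)
    print(f"parallel fraction {fraction:.2f}: at most {speedup:.2f}x on 4 cores")
```

A code that is only half parallel tops out at 1.6x on a quad-core processor, and a fully serial code gains nothing, even though all four cores are drawing power.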

Start with the Source

What better place to begin improving energy efficiency than the power supply and distribution system? In many ways, this is the locus of the greatest energy loss and the place where the most significant improvement can be made. To appreciate the scale of the problem, consider the Power Usage Effectiveness (PUE) metric, a measure of data center energy efficiency calculated by dividing the total power entering a data center by the power used to run its computing infrastructure (see Figure 1).

According to several surveys, the average PUE of U.S. data centers is probably somewhere between 2 and 2.4. That means that if you have 2W going into the data center to power everything—the equipment, chillers, cooling towers, pumps, and UPSs—only 1W is going to the IT equipment. So half the energy is used before it gets to the computing systems. Then go one step further and ask: Of the 1W going to the IT equipment, how much is used to provide bits and bytes? The utilization rates of x86 architectures are between 5% and 12%. So if that 1W goes into the rack and you are getting only 5% utilization, only 0.05W is used to perform computing.
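The arithmetic in this paragraph can be written out in a few lines. This is a sketch using the article's own figures (a PUE of 2 and 5% utilization). The function names are hypothetical.

```python
def it_power(facility_power_w, pue):
    """Power reaching the IT equipment, from PUE = facility power / IT power."""
    if pue < 1.0:
        raise ValueError("PUE cannot be below 1")
    return facility_power_w / pue


def useful_compute_power(facility_power_w, pue, utilization):
    """Power that actually goes into computing at a given utilization rate."""
    return it_power(facility_power_w, pue) * utilization


facility_w = 2.0  # watts entering the data center
reaching_it = it_power(facility_w, pue=2.0)
computing = useful_compute_power(facility_w, pue=2.0, utilization=0.05)

print(f"IT equipment receives {reaching_it:.2f} W")
print(f"Computing uses {computing:.3f} W, or {computing / facility_w:.1%} of the input")
```

With these numbers, only 2.5% of the power entering the building ends up doing computational work.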

You can counter this energy loss and achieve greater efficiency by improving the power supply and distribution system on two levels. The first is the process of converting alternating current to direct current. The second is in the distribution of power within the HPC system itself.

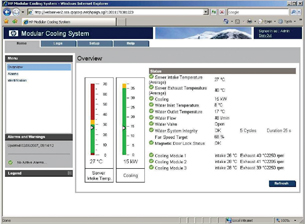

Figure 3: The Hewlett-Packard Modular Cooling System uses fans and air-to-liquid cooling heat exchangers to remove heat. The system is used in datacenters to eliminate hot spots without revamping the overall architecture. Image courtesy of Hewlett-Packard.

You may have 13–15 kV coming into the building, which you have to step down to the roughly 1 V used by the chips. The more conversion steps you take in this process, the greater the energy lost as waste. So you reduce the number of steps by bringing the high voltage down only to 480 VAC, which many HPC clusters and computing racks can accommodate. In doing so, “you improve energy efficiency by 2 percent to 3 percent,” says Roger Schmidt, IBM distinguished engineer.
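The reason fewer steps help is that losses compound: the end-to-end efficiency of a power chain is the product of the efficiencies of its stages. The sketch below shows the effect of dropping one stage. The per-stage efficiencies are hypothetical placeholders, not measured values for any real equipment, and the function name is an assumption.

```python
from math import prod


def chain_efficiency(stage_efficiencies):
    """End-to-end efficiency of power conversion stages connected in series."""
    stages = list(stage_efficiencies)
    for eff in stages:
        if not 0.0 < eff <= 1.0:
            raise ValueError("each stage efficiency must be in (0, 1]")
    return prod(stages)


# Hypothetical per-stage efficiencies (assumed for illustration only).
longer_chain = [0.98, 0.97, 0.94, 0.90]
shorter_chain = [0.98, 0.94, 0.90]  # one intermediate step removed

print(f"four stages:  {chain_efficiency(longer_chain):.1%}")
print(f"three stages: {chain_efficiency(shorter_chain):.1%}")
```

Removing a single stage that is already 97% efficient still recovers a couple of percentage points overall in this example, which is the scale of gain Schmidt describes.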

Another way of saving energy at this level is to use high-quality equipment. “There are high-efficiency uninterruptible power supply systems, power distribution units, and transformers on the market,” says Schmidt. “You can gain several percentage points by buying the better products on the market.”

Next, you can improve energy efficiency by managing the distribution of power within the HPC system itself. A range of hardware and software tools can help you achieve this on multiple levels (see Figure 2).

“There are tools now in place on some machines that allow you to go in and actually limit the power drawn by the computer,” says Schmidt.

HPC system manufacturers are also beginning to implement power-management features that identify components that are not doing any work or processing and turn them off or put them in sleep mode. “Any workload requires the operation of only some of the components at any one time,” says Giri Chukkapalli, HPC systems architect at Sun Microsystems. “So this fine-grain power management is critical. Well-integrated hardware and software is the key going forward.”

Because HPC systems can have multiple power supplies within a system, it’s also important to balance loading. HP uses an onboard microprocessor to do this. “A microprocessor we call the onboard administrator manages the loading of power,” says Ed Turkel, manager of the High-Performance Computing Product and Technology Group at Hewlett-Packard. “It will actually distribute the power load across the multiple power supplies in a way that keeps them high up in their efficiency curve.”
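One simple way to picture this kind of balancing is to run only as many supplies as the load requires, so that each active supply carries a larger share of its rating. The sketch below is a generic illustration and not HP's algorithm. The function names, the 80% target load, the supply rating, and the supply count are all assumptions, and redundancy requirements are ignored.

```python
import math


def supplies_needed(load_w, rating_w, target_fraction=0.8, installed=6):
    """Fewest supplies that carry load_w with each at or below target_fraction."""
    if load_w < 0 or rating_w <= 0 or not 0.0 < target_fraction <= 1.0:
        raise ValueError("invalid load, rating, or target fraction")
    needed = max(1, math.ceil(load_w / (rating_w * target_fraction)))
    if needed > installed:
        raise ValueError("load exceeds installed capacity at the target fraction")
    return needed


def load_fraction(load_w, rating_w, active):
    """Share of its rating that each active supply carries."""
    return load_w / (active * rating_w)


# Hypothetical enclosure: six 1,200 W supplies feeding a 3,000 W load.
active = supplies_needed(3000, 1200)
print(f"active supplies: {active}")
print(f"load per active supply: {load_fraction(3000, 1200, active):.1%}")
print(f"load if spread over all six: {load_fraction(3000, 1200, 6):.1%}")
```

In this example four supplies each run at 62.5% of rating instead of six running at about 42%, which keeps each one further up a typical efficiency curve.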

Additions and Subtractions

You can wring out even more energy efficiency by choosing a system in which the vendor has added extra components on the one hand and eliminated unnecessary elements on the other. Again, you are creating a balance based on the requirements of your application.

“We have an engineering team that works with the major ISVs, and they spend a lot of time with each new system generation, simply seeing how all the standard applications run in that environment,” says Hewlett-Packard’s Turkel.

A recent trend is the use of alternative processors, which in the case of HPC systems include general-purpose graphics processing units (GPGPUs) and specialized ASICs. At the moment, the alternative processors do not operate independently. They are tied to the x86 processors and function as coprocessors, much as add-on coprocessors used to in PCs.

The primary advantage of using an alternative processor is accelerating the execution of the job. Overall, the processors can either make the job run faster or make it run with less power. With GPGPUs, you are really looking for a significant increase in performance that outweighs any increase in power used by the GPGPU.

“When GPGPUs get to their highest levels of performance, they are power hungry themselves,” says Turkel. “They are drawing similar levels of power as the microprocessors themselves—in many instances, even more. So you don’t automatically save power by using them, but you may be speeding up the application significantly.”
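Turkel's point comes down to energy to solution, which is power multiplied by runtime. An accelerator saves energy only when its speedup exceeds the factor by which it raises power draw. The sketch below uses hypothetical wattages and runtimes, and the function names are assumptions.

```python
def energy_to_solution_wh(power_w, runtime_h):
    """Energy consumed by one job, in watt-hours."""
    return power_w * runtime_h


def accelerator_saves_energy(power_ratio, speedup):
    """True when the speedup outweighs the increase in power draw."""
    return speedup > power_ratio


# Hypothetical node: adding a GPGPU doubles power draw but runs the job 5x faster.
cpu_only = energy_to_solution_wh(power_w=300.0, runtime_h=10.0)
accelerated = energy_to_solution_wh(power_w=600.0, runtime_h=2.0)

print(f"CPU only:    {cpu_only:.0f} Wh")
print(f"Accelerated: {accelerated:.0f} Wh")
print(f"Saves energy: {accelerator_saves_energy(power_ratio=2.0, speedup=5.0)}")
```

If the same accelerator delivered only a 1.5x speedup, the job would finish sooner but consume more energy overall.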

But don’t expect alternative processors to deliver their full advantage for a while. “The software to program the accelerators is still in early stages,” says Sun’s Chukkapalli. “The libraries required to take advantage of accelerators are in the early stages. We are quite far away from an optimized situation.”

On the other hand, you can reduce power consumption by eliminating unnecessary components and subsystems. “One of the aspects of power efficiency is simply not overloading the node with components that are not going to be used or that are not important for the application,” says Turkel. “If you look at our ProLiant double-dense blade that we introduced last year, we’ve made some compromises. Instead of having two rotating disks, it has one. Instead of having a larger memory complement, it has fewer DIMM slots.”

Cooling Technology

Because HPC cooling consumes large amounts of energy, the area is another prime place to reduce power consumption (see Figure 3). Generally speaking, there are two main cooling approaches: air and liquid. Liquid can be water or an inert substance. Smaller HPC systems primarily use air, while a growing number of larger systems are turning to liquid.

Cooling is achieved on multiple levels: the chip, board, cabinet, and room. HPC systems generate heat within the cabinets, and the heat is exhausted into the room, a fairly inefficient way to do it. Conventional wisdom is that the closer the cooling mechanism is to the origin of the heat, the more effective it is.

Liquid, water in particular, has much better heat transfer characteristics than air. By mounting liquid-cooled cold plates on top of processor modules and on the back of cabinets, you can effectively remove the heat before it circulates into the room. The lower temperatures allow you to raise the frequency of the processor, increasing the system’s performance and improving the energy efficiency of the data center.

Compared to air-cooling technology, says IBM’s Schmidt, you can realize about a 40 percent energy reduction using liquid. “If you look at the total load of the data center—air-cooled vs. water-cooled—you will save something like 10 percent up to 20 percent of the total energy in the data center,” he says. “That includes the IT load and the infrastructure support.”
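Schmidt's two figures can be cross-checked against the cooling share shown in Figure 1. The sketch below assumes that the 40 percent reduction applies to cooling energy and that cooling accounts for 30% to 35% of facility power. That reading is an interpretation, and the function name is hypothetical.

```python
def facility_savings(cooling_share, cooling_reduction):
    """Fraction of total facility energy saved when cooling energy is reduced."""
    if not 0.0 <= cooling_share <= 1.0 or not 0.0 <= cooling_reduction <= 1.0:
        raise ValueError("shares and reductions must be between 0 and 1")
    return cooling_share * cooling_reduction


# Cooling shares from Figure 1; the 40% reduction is Schmidt's estimate.
for share in (0.30, 0.35):
    saved = facility_savings(share, cooling_reduction=0.40)
    print(f"cooling at {share:.0%} of load: saves {saved:.0%} of facility energy")
```

On these assumptions the saving works out to roughly 12 to 14 percent of total facility energy, which falls inside the 10 to 20 percent range he quotes.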

Incremental Improvements

Working with your hardware vendor, you can improve HPC system energy efficiency on multiple levels, with a range of standard technologies. Effective techniques always factor in your application requirements.

Tweak performance at the microprocessor and system levels, using design best practices. Eliminate unnecessary components and subsystems. And make sure the system you select implements high-performance liquid cooling and energy management tools. All of that translates into better performance per watt.

More Info:

Cray

Seattle, WA

IBM

Armonk, NY

IDC

Framingham, MA

Hewlett-Packard

Palo Alto, CA

Sun Microsystems

Santa Clara, CA

Tom Kevan is a New Hampshire-based freelance writer specializing in technology. Send your comments about this article to [email protected].

