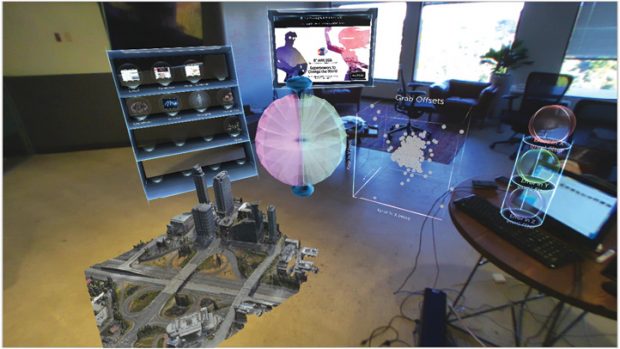

A snapshot of the hologram-augmented view, as seen inside Meta’s AR environment. Image courtesy of Meta.

August 1, 2017

At the age of seven, Ryan Pamplin discovered he needed to wear glasses. Whereas other kids might bemoan the nerdy look, Pamplin instead began dreaming of one day combining his glasses with his other favorite toy—the computer. In a way, he helped make his own dream come true.

“I’m now sitting in my office wearing my Meta glasses,” he explains. “There’s a Polaroid-style picture of my girlfriend and [me] floating around me. I’ve got a virtual monitor floating above my desk. Next to me, I have a skeleton I can fully dissect. Oh, and a little virtual campfire I keep under my desk. I jokingly tell people it keeps me warm.”

The virtual campfire may be nothing more than a giggle-inducing gimmick made of pixels, but the virtual monitor and skeleton are fully functional. “I run my Windows and Mac applications on the monitor. And I can pull apart the skeleton, read the annotations and learn about body parts and muscle groups the way I never could from a textbook,” says Pamplin.

Pamplin, VP and technology evangelist at Meta, believes this is how most people will prefer to work, live and socialize in the near future: in an augmented reality (AR) where the distinction between digital and physical objects is not always clear.

It’s a vision shared by graphics processing unit (GPU) maker NVIDIA, enterprise software maker IFS and CAD software giants Autodesk and Dassault Systèmes, among others. At this year’s GPU Technology Conference (May 2017, The Venetian, Las Vegas, NV), NVIDIA Chief Executive Officer Jensen Huang introduced the Holodeck, a virtual reality (VR) setup for design collaboration, inspired by the reality-simulator depicted in the popular sci-fi TV show “Star Trek.”

What would CAD, product lifecycle management (PLM) or enterprise resource planning (ERP) look like in the age of AR-VR? The projects under way today offer some clues. But to boldly go where AR-VR applications have never gone before, they must confront the legacies from the mouse-and-keyboard era.

Meta projects holographic objects into its eyewear to enable AR-based workflows. Image courtesy of Meta.

Augmented Reality: A Natural Way to Interact with 3D

In February, at SOLIDWORKS World, the annual Dassault Systèmes SOLIDWORKS user conference, SOLIDWORKS CEO Gian Paolo Bassi announced a partnership between SOLIDWORKS and Meta. “Meta designed its device with 3D holographs instead of flat screens,” said Bassi in his onstage keynote address.

Meta writes, “The headset displays holograms and digital content, comes with a software development kit (SDK) built on top of Unity (the most popular 3D engine in the world) and includes Workspace, Meta’s new AR operating environment that has been built based on our AR design guidelines.”

A notable feature of the Meta device is what Meta CEO Meron Gribetz describes as “gestural computing.” The headset is equipped with built-in depth sensors, two outward-facing cameras and a pair of six-axis IMUs (inertial measurement units). This lets the device track your hand position and correlate it with the 3D holographic space. With this setup, you can grab, hold, move and rotate 3D holographic models with natural motions, as though you were handling a tangible object floating in midair.
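The article does not describe Meta's SDK (which is built on Unity) at the code level, so the following is only a hypothetical sketch, in Python, of how a tracked hand can be correlated with a hologram to grab, move and rotate it. It assumes a hand tracker that reports, for each frame, a hand position in the same world coordinates as the hologram, a heading (yaw) and a pinch flag. The names `HandSample`, `Hologram`, `GrabController` and the 10 cm `GRAB_RADIUS` are illustrative inventions. To keep the math short, rotation is limited to the vertical axis.

```python
import math
from dataclasses import dataclass
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]

# Hypothetical threshold: a pinch must start within 10 cm of the hologram to grab it.
GRAB_RADIUS = 0.10


def rotate_y(v: Vec3, angle: float) -> Vec3:
    """Rotate v about the vertical (y) axis by `angle` radians."""
    c, s = math.cos(angle), math.sin(angle)
    x, y, z = v
    return (c * x + s * z, y, -s * x + c * z)


@dataclass
class Hologram:
    position: Vec3
    yaw: float = 0.0  # heading about the vertical axis, in radians


@dataclass
class HandSample:
    """One frame of (hypothetical) hand-tracking output in world coordinates."""
    position: Vec3
    yaw: float
    pinching: bool


class GrabController:
    """Attach a hologram to the hand while a pinch that started on it is held."""

    def __init__(self, target: Hologram) -> None:
        self.target = target
        self.local_offset: Optional[Vec3] = None  # hologram offset in the hand's frame
        self.yaw_offset = 0.0
        self.was_pinching = False

    def update(self, hand: HandSample) -> None:
        pinch_started = hand.pinching and not self.was_pinching
        self.was_pinching = hand.pinching
        if not hand.pinching:
            self.local_offset = None  # fingers opened: release the hologram
            return
        if self.local_offset is None:
            # Only a pinch that begins within reach of the hologram grabs it.
            too_far = math.dist(hand.position, self.target.position) > GRAB_RADIUS
            if not pinch_started or too_far:
                return
            world = tuple(t - h for t, h in zip(self.target.position, hand.position))
            self.local_offset = rotate_y(world, -hand.yaw)
            self.yaw_offset = self.target.yaw - hand.yaw
        # While held, the hologram keeps its pose relative to the hand, so moving
        # the hand translates it and turning the hand swings and rotates it.
        offset = rotate_y(self.local_offset, hand.yaw)
        self.target.position = tuple(h + o for h, o in zip(hand.position, offset))
        self.target.yaw = hand.yaw + self.yaw_offset
```

The controller stores the offset in the hand's own frame at the moment of the grab. Because of this, the hologram swings around with the wrist instead of just sliding. A full implementation would use quaternions to handle all three rotation axes.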

Enterprise software maker IFS envisions using AR to let field crew, technicians and maintenance workers automatically identify assets and review relevant data. Image courtesy of IFS Labs.

“On a traditional flat screen, if you want to rotate the model to pinpoint a parting line or examine the area closely to see how light reflects around it, you can’t really do it with dexterity. In Meta, we can give you that ability,” says Pamplin. “For anyone working with 3D, they’ll have a huge competitive advantage with AR.”

“In our SOLIDWORKS software, we’re adding a button to let you export beautifully detailed CAD models as glTF (GL Transmission Format) or STL (STereoLithography) files, compatible with most game engines,” says Arnav Mukherjee, SOLIDWORKS development director for viewing and experimental technology.
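STL is a deliberately simple format. It is a list of triangles, each with a facet normal and three vertices, and it carries nothing about units, materials or feature history. That simplicity is part of why game engines accept it. The sketch below writes ASCII STL from plain Python tuples. It illustrates the file layout only and is not SOLIDWORKS' exporter; the function names are hypothetical. glTF, which stores meshes as JSON plus binary buffers, is not shown.

```python
import math
from typing import Iterable, Tuple

Vec3 = Tuple[float, float, float]
Triangle = Tuple[Vec3, Vec3, Vec3]


def _sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _cross(u: Vec3, v: Vec3) -> Vec3:
    return (u[1] * v[2] - u[2] * v[1],
            u[2] * v[0] - u[0] * v[2],
            u[0] * v[1] - u[1] * v[0])


def facet_normal(a: Vec3, b: Vec3, c: Vec3) -> Vec3:
    """Unit normal by the right-hand rule (vertices counter-clockwise seen from outside)."""
    n = _cross(_sub(b, a), _sub(c, a))
    length = math.sqrt(sum(x * x for x in n))
    return tuple(x / length for x in n) if length > 0 else (0.0, 0.0, 0.0)


def to_ascii_stl(name: str, triangles: Iterable[Triangle]) -> str:
    """Serialize triangles as ASCII STL: one facet per triangle, no units or colours."""
    lines = [f"solid {name}"]
    for a, b, c in triangles:
        nx, ny, nz = facet_normal(a, b, c)
        lines.append(f"  facet normal {nx:e} {ny:e} {nz:e}")
        lines.append("    outer loop")
        for x, y, z in (a, b, c):
            lines.append(f"      vertex {x:e} {y:e} {z:e}")
        lines.append("    endloop")
        lines.append("  endfacet")
    lines.append(f"endsolid {name}")
    return "\n".join(lines) + "\n"
```

Real exporters usually emit the more compact binary STL variant. A CAD tool must also first tessellate its exact surfaces into triangles, and that step is where the trade-off between fidelity and file size is made.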

From Heavy CAD to Lightweight Mesh

Uses of AR in engineering may be divided into two main categories: consumption and creation. In an AR setup for design consumption, engineers may view the digital prototype of a concept (for example, the proposed design for a new vehicle) for assessment. In an AR setup for design authoring, engineers may use a variety of operations to create the digital model of a design concept from scratch.

Autodesk offers Autodesk Forge, a platform that AR-VR developers can use to power applications with Autodesk technology. Image courtesy of Autodesk.

For the most part, the first-generation AR-VR tools will focus on data viewing, not authoring. “I’ve been finding that consumption is the first stage. We still have to solve a lot of issues there,” says Mukherjee.

For AR viewing, the first hurdle is converting the fully detailed CAD data into a reasonably lightweight format suitable for AR. “Usually, the data coming from CAD is too heavy, too rich and in high resolution,” says Brian Pene, director of emerging technology at Autodesk. “It’s not easy to put this into a game engine and run it at 90 frames per second. People are now spending tens of thousands of dollars just to get the data into AR-VR apps.”
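The arithmetic behind Pene's point is simple. At 90 frames per second, each frame has about 1,000 ms / 90 ≈ 11.1 ms. A mesh tessellated for engineering accuracy therefore often has to shed triangles before it fits that budget. The article does not say how Autodesk's service reduces models. The sketch below uses vertex clustering, one of the simplest textbook decimation techniques, chosen here only for illustration. Production tools typically rely on error-driven methods such as quadric edge collapse. `cluster_decimate` and its `cell_size` parameter are hypothetical names.

```python
import math
from typing import Dict, List, Sequence, Tuple

Vec3 = Tuple[float, float, float]
Tri = Tuple[int, int, int]


def cluster_decimate(vertices: Sequence[Vec3], triangles: Sequence[Tri],
                     cell_size: float) -> Tuple[List[Vec3], List[Tri]]:
    """Vertex-clustering simplification on a uniform grid.

    Every vertex falling into the same grid cell is merged into one vertex at
    the average position of that cell's vertices. Triangles that collapse (two
    corners in one cell) or duplicate an already-kept triangle are dropped.
    """
    cell_index: Dict[Tuple[int, int, int], int] = {}
    sums: List[List[float]] = []
    counts: List[int] = []
    remap: List[int] = []  # old vertex index -> new vertex index
    for x, y, z in vertices:
        cell = (math.floor(x / cell_size), math.floor(y / cell_size), math.floor(z / cell_size))
        if cell not in cell_index:
            cell_index[cell] = len(sums)
            sums.append([0.0, 0.0, 0.0])
            counts.append(0)
        i = cell_index[cell]
        sums[i][0] += x
        sums[i][1] += y
        sums[i][2] += z
        counts[i] += 1
        remap.append(i)

    new_vertices = [(s[0] / n, s[1] / n, s[2] / n) for s, n in zip(sums, counts)]

    new_triangles: List[Tri] = []
    seen = set()
    for a, b, c in triangles:
        ia, ib, ic = remap[a], remap[b], remap[c]
        if ia == ib or ib == ic or ia == ic:
            continue  # degenerate after merging
        key = tuple(sorted((ia, ib, ic)))
        if key in seen:
            continue  # same three merged vertices already emitted
        seen.add(key)
        new_triangles.append((ia, ib, ic))
    return new_vertices, new_triangles
```

A larger `cell_size` removes more triangles but also more detail. That single knob is the trade-off between fidelity and frame rate in miniature.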

Sitting at the intersection of architecture, media and entertainment, and product design, Autodesk is in a position to draw on its game and filmmaking technologies to build an easy data pipeline from CAD to AR-VR. “We created a service in Autodesk Forge that lets you push a button to send your Autodesk Revit models [3D architectural models] into the cloud, and ‘automagically’ create an immersive VR experience,” Pene says. The same approach, he suggests, would make it possible to convert large mechanical assembly models to an AR- or VR-ready state.

Autodesk Forge, the company’s subscription-based software development platform, supports more than 50 3D file formats, including many associated with software developed by Autodesk rivals. The magic in automagic, Pene explains, is a blend of cloud connectivity, high-performance computing (HPC) and artificial intelligence (AI).

Augmented Reality: An Untethered Future

The computing power required to deliver and sustain visual fidelity in AR-VR is considerable. It usually demands the capacity of a midrange or top-tier professional workstation, which is why many AR-VR setups are still tethered by cable today. Mobility usually comes in the form of a cumbersome backpack computer, which you must strap to your back or carry with you as you navigate your virtual scene.

But this is bound to change in a couple of years. “The graphics capability—particularly, the GPU you can fit into a wearable—is not powerful enough today [for AR-VR],” says Meta’s Pamplin. To him, it makes sense to develop the Meta SDK to work with a desktop machine because “the current professional GPUs for the desktop matches what you’ll likely get in your pocket devices in the future. Within the next few years we’ll cut the cord.”

Hands-free Asset Management

As Bas de Vos sees it, enterprise resource planning (ERP) interfaces need not be locked up in a mind-numbing series of grids, columns and rows. As director of IFS Labs, a division of the enterprise software company IFS, de Vos is responsible for exploring future technologies that the company can benefit from.

“There’s a good business case for AR-powered ERP,” says de Vos. “With it, you can provide the field technicians and engineers with the right information at the right time, in the right context, without requiring them to go into the system to find it.”

For IFS customers, resources and assets could be airplane components, industrial machinery or oil rigs. “So imagine you can walk up to an asset wearing Microsoft HoloLens,” says de Vos, “and it could recognize the asset you’re looking at, could pop up a list of relevant work orders, along with instructions on how to perform the maintenance required.”

Automatic asset recognition is the easy part. You could use barcodes, QR codes, beacons or a mix of the three to let mobile devices instantly recognize what they see in the camera view. Such a solution can even be implemented affordably today with tablets and smartphones. But AR offers another advantage.
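After a barcode or QR library has decoded the tag in the camera view, the lookup de Vos describes comes down to two steps: map the decoded payload to an asset, then filter that asset's work orders. The sketch below assumes a payload of the form `ASSET:<id>` and an in-memory registry. Both, like every name in the sketch, are hypothetical and do not reflect IFS's software, where the data would come from the ERP system.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional

TAG_PREFIX = "ASSET:"  # assumed payload format, e.g. "ASSET:PUMP-0042"


@dataclass
class WorkOrder:
    order_id: str
    description: str
    status: str  # "open" or "closed"


@dataclass
class Asset:
    asset_id: str
    name: str
    work_orders: List[WorkOrder] = field(default_factory=list)


def parse_tag(payload: str) -> Optional[str]:
    """Return the asset ID encoded in a decoded barcode/QR payload, or None."""
    if payload.startswith(TAG_PREFIX):
        asset_id = payload[len(TAG_PREFIX):].strip()
        return asset_id or None
    return None


def open_work_orders(payload: str, registry: Dict[str, Asset]) -> List[WorkOrder]:
    """Work orders to pop up when the camera recognizes a tagged asset."""
    asset_id = parse_tag(payload)
    asset = registry.get(asset_id) if asset_id else None
    if asset is None:
        return []  # unknown tag or unregistered asset: show nothing
    return [wo for wo in asset.work_orders if wo.status == "open"]
```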

“With HoloLens, you get the hands-free experience,” says de Vos. That’s important in IFS’s world, because field engineers need their hands to work on the asset. “You can also easily launch Skype from your headset, make a call and share your view with someone located remotely.”

With view sharing, an expert far away could direct a field technician or even a trainee-level user to perform certain advanced procedures without having to travel. “Customer satisfaction would be a lot higher if we can fix something right on the spot without going back to the office,” says de Vos.

In early prototypes, IFS has been able to stream ERP and PLM data stored in IFS applications seamlessly into HoloLens. Data consolidation, however, could be challenging for firms that use systems and software from multiple competing vendors. “If an organization chooses to store information in 25 different sources, and those systems are not talking to one another, then it’s that much more difficult to get a single picture of the truth, so that’s something that needs to be considered continuously,” cautions de Vos.

Don’t Build Muscle Memory with Joysticks

NVIDIA describes its Project Holodeck as “a photorealistic, collaborative VR environment that incorporates the feeling of real-world presence through sight, sound and haptics.” If implemented effectively, the last item—haptic feedback—would make all the difference.

Matthew Noyes, the software lead at the NASA Johnson Space Center’s Hybrid Reality Lab, was one of the speakers at NVIDIA GTC. Discussing the use of AR for astronaut training, he pointed out, “We don’t want to just teach the astronauts how to use the tools, but we want them to develop the muscle memory of actually using the tool.”

Noyes and his team used 3D-printed replicas of the repair tools during the AR-driven training sessions to give the trainees a good feel for using the tool. “A maintenance drill used on the Hubble station for repair costs about a million dollars to manufacture. But a 3D-printed facsimile can be created with about $20 worth of plastic materials,” Noyes said. “The 3D-printed tool is hollow inside, so we can [add artificial weight] to make it weigh as much as the real thing.”

Currently, in most AR-VR setups, the visuals are stunningly realistic but the illusion is shattered once you reach out to touch a digital object. You can, for example, accurately judge the look of a luxury car’s leather-coated interior, but you won’t feel the texture, weight and stiffness as you would in the real world.

Joysticks and other standard devices are sufficient for navigating a 3D scene, but they are shaped differently than the real tools field technicians would use to perform the tasks. Therefore, developing joystick-based habits and intuitions could be detrimental in AR training of field work. Haptic feedback and 3D-printed replicas could add the missing layer of realism to such applications.

Holodeck is “built on an enhanced version of Epic Games’ Unreal Engine 4 and includes NVIDIA GameWorks, VRWorks and DesignWorks,” the company writes. In the demonstration at NVIDIA GTC, the company showed Holodeck handling a 50-million-polygon 3D vehicle model.

Zero Learning-Curve 3D Modeling

Take a look at how a child plays with Play-Doh. When she wants a square block, she might pat the bottom, top and sides of the misshapen lump. When she wants a hole, she might poke through the surface with her fingertip. When she wants to smooth the block’s edges, she might rub off some material with her fingers. That is, in a manner of speaking, a zero-learning-curve modeling application.

“I think a Play-Doh-like interface would only work if the application gives tactile feedback. Otherwise, even if you get visual clues, it would feel strange or unnatural for the user,” says SOLIDWORKS’ Mukherjee. “Most CAD users will probably still use keyboard and mouse for now. But they should also have a way to easily press a button and see their design in AR-VR headsets.”

Meta’s Pamplin says, “I want a two-year-old or an 82-year-old to be able to put on our device and understand exactly how to use it right away. I want the digital tools to resemble tools in the real world. The digital sculpting tool should look like a real sculpting tool. It should not look like an abstract icon or menu.”

The emergence of affordable AR technology offers the tantalizing possibility of reinventing 3D CAD with a much more natural interface and shedding the menu-centric habits developed in the era of the mouse and keyboard. But old habits are hard to break. Overcoming the keyboard shortcuts that are now part of many CAD-savvy engineers’ muscle memory may be harder than solving the technical issues of AR-VR.

About the Author

Kenneth Wong is Digital Engineering’s resident blogger and senior editor. Share your thoughts on this article at digitaleng.news/facebook.
