HPC Workflow Tips

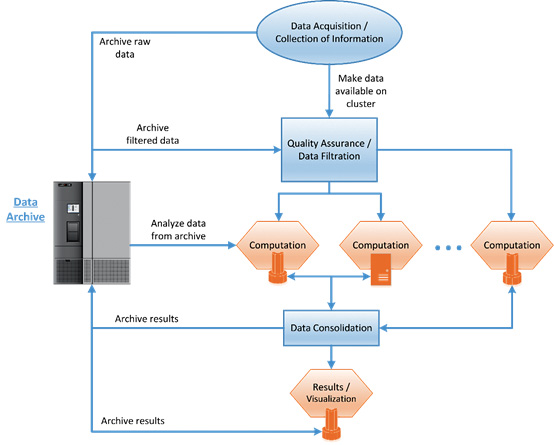

The general structure of the high-performance computing workflow is pictured. Image courtesy of Mellanox.

November 1, 2016

Today’s design environment has many new and emerging tools that help design engineers capitalize on large volumes of data to design and build better products. One tool that brings many benefits is high-performance computing (HPC), with its ability to speed workflows.

Supercomputers and parallel processing techniques are at the center of an HPC workflow. They enable organizations to solve complex computational problems in less time, and to visualize and predict outcomes before implementing design solutions and ideas.

View and Predict Before the Build

“One common aspect to most HPC workflows is the notion of forward view, or prediction,” says Eric Polet, Emerging Markets program manager at Spectra Logic. “HPC systems are used to predict weather, climate, atomic reaction and more, so when developing a product, it allows you to view and predict the outcome of a system prior to going to a physical environment.

“As an example, let’s assume we are building an engine for a car,” Polet continues. “One key component of that engine would be the cylinder head. The cylinder head provides power to the engine and the emissions that will be put out by the engine. By simulating the cylinder head and the engine through a computer prior to going to tooling, an engineer can tweak parts and get the best performance with the lowest emissions.”

Polet posits that HPC enables processing of vast amounts of information to provide the granular detail that drives decisions toward successful outcomes. Without HPC, engineers would fall back on a trial-and-error approach, or what he describes as “tribal knowledge” of engine combustion.

“This same type of simulation is used for weather, climate and pandemic sciences that need a future view,” Polet adds. “As organizations implement a product development workflow, it is vital to confirm their infrastructure can grow with their organization, ensuring resources are maximized not just today, but for years to come. Regardless of when and how often data is read, it is vital that organizations have a reliable and affordable archive where data can be stored for future analysis and recalled whenever needed.”

Not long ago, HPC required more IT infrastructure. Large mainframe computers at colleges and universities were commonly used. Today, things are simpler.

For today’s engineer, HPC is much easier and more hassle-free than in the past—especially with on-demand, high-performance cloud services.

“They no longer have to wait for queued simulation jobs to run, or write complex scripts to do work,” says Leo Reiter, CTO at Nimbix, an HPC cloud platform provider. “Modern platforms provide turnkey workflows for the simulation applications they’re using, but with a much larger scale than they may be used to. This means they can get their work done much faster, without learning new methodologies first, and at very economic prices without upfront commitments.”

HPC Product Development

One challenge engineers face in using HPC is structuring a logical workflow that accommodates the processing requirements of their project.

“Depending on the type of product, it’s best to identify which steps of the workflow actually require HPC, and take into account where data needs to be available at any given time,” says Reiter. “For example, for a typical manufacturing process, there is design, simulation and post processing. Typically, only simulation is the stage of the workflow that actually requires HPC. But if the data sets are large enough, it’s important that the data be near-line to each stage. For example, if leveraging cloud computing for HPC, it’s important that the cloud support all three steps of the workflow so that you don’t need to move data back and forth as part of the workflow. It’s also important that the platform support batch processing for the HPC parts so that you are not paying for setup and tear down time and effort as well.”
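Reiter’s advice can be sketched in a few lines of Python. This is a minimal illustration, not a Nimbix API: the Stage class, the location labels and the data sizes are all hypothetical. It marks which stages need HPC and adds up how much data would have to move when consecutive stages run in different places.

```python
from dataclasses import dataclass


@dataclass
class Stage:
    name: str
    needs_hpc: bool    # does this stage need HPC-scale compute?
    location: str      # where the stage runs (hypothetical labels)
    output_gb: float   # data handed to the next stage (illustrative sizes)


def hpc_stages(workflow):
    """Return the names of the stages that actually require HPC."""
    return [stage.name for stage in workflow if stage.needs_hpc]


def data_moved_gb(workflow):
    """Sum the output of every stage whose successor runs somewhere else."""
    moved = 0.0
    for current, following in zip(workflow, workflow[1:]):
        if current.location != following.location:
            moved += current.output_gb
    return moved


# Only simulation runs in the cloud; design and post-processing stay local.
split = [
    Stage("design", False, "workstation", 2.0),
    Stage("simulation", True, "cloud", 500.0),
    Stage("post-processing", False, "workstation", 5.0),
]
# The same workflow with all three stages hosted in the cloud.
all_cloud = [Stage(s.name, s.needs_hpc, "cloud", s.output_gb) for s in split]

print(hpc_stages(split))         # ['simulation']
print(data_moved_gb(split))      # 502.0
print(data_moved_gb(all_cloud))  # 0.0
```

With these made-up numbers, keeping every stage on one platform removes the transfer entirely, which is the point Reiter makes about large data sets.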

According to Scot Schultz, an HPC technology specialist at Mellanox, it’s best to apply boundaries to the applications required for product development, and then look at each application’s number of elements, network communication patterns and storage access needs.

Building a network that supports the HPC workflow is key, and achieving this often means removing clutter from the flow.

“For operations like Message Passing Interface (MPI), it’s best to manage this from the fabric, not the CPU,” says Schultz. “It really starts with one very basic idea—remove overhead that is not fundamental to a core element, and then, if possible, optimize and accelerate it. In other words, CPUs should be doing compute; not managing network communication—the network should be intelligent and powerful enough to handle the network functions needed across the I/O and the compute. Very similar for storage—leveraging native Remote Direct Memory Access (RDMA) storage can make the difference in a workflow’s time-to-solution.”
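A rough way to see why this matters is a toy timing model. The function below is an idealized sketch with hypothetical numbers, not a Mellanox measurement: it assumes that when the CPU manages communication, compute and communication run back to back, and that when the fabric handles communication, the two overlap completely. Real systems fall somewhere in between.

```python
def step_time(compute_s, comm_s, offloaded):
    """Idealized time for one iteration of a parallel job.

    Assumption: without offload the CPU does compute, then communication;
    with offload the network handles communication while the CPU computes.
    """
    if offloaded:
        return max(compute_s, comm_s)
    return compute_s + comm_s


# Hypothetical iteration: 8 seconds of compute, 2 seconds of communication.
print(step_time(8.0, 2.0, offloaded=False))  # 10.0
print(step_time(8.0, 2.0, offloaded=True))   # 8.0
```

Under these assumptions the saving per iteration is small, but it repeats on every iteration of a long-running simulation.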

Data Size Matters

Several areas deserve attention when developing an HPC workflow. One is the size of your data set and what kind of cloud configuration will work best for that size.

Organizations with smaller data sets and low data analytics needs are best suited for public cloud and SaaS (Software-as-a-Service). With a pay-as-you-go or subscription model, a public cloud is a more affordable option for storing, analyzing and sharing data. Because startup costs and hardware needs are minimal, organizations can implement a full public cloud environment while meeting all necessary requirements. However, this changes as the data set grows.

“As data sets grow, and demand for data analytics increases, organizations find themselves moving to clustered file systems, located on premise,” says Polet. “These clustered systems provide almost infinite computing power, but with additional power comes added costs. For organizations utilizing clustered file systems, cloud computing and storage would be a far more expensive option, due to the amount of virtual machines that would be needed to perform in the cloud. Clustered systems have limitations in affordable storage capacity and ability to distribute data, which are important to factor into decisions. However, for organizations needing high data analytics, small data archives and limited distribution of content, a clustered system is ideal and the most affordable.”

For those with larger data sets where analytics and computation are more involved, a data center with large amounts of storage and substantial compute power is in order.

“If one of these organizations were to go to a fully public cloud infrastructure, the costs would be so enormous they may have to choose what data to keep and what computations to run,” says Polet. “With a clustered system, storage would be limited and the organization would have to decide what results to retain and what data is important. One limitation to data centers, as opposed to cloud, is the inability to share and distribute data. Often times, organizations are required to introduce additional hardware and software to perform these tasks. The benefits of a data center, however, are that organizations are able to store all their data, keep it for as long as they need and perform the required computations.”
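Polet’s guidance can be summarized as a simple rule of thumb. The sketch below is only an illustration: the function name, the "low" and "high" labels and the 100 TB cutoff for a large data set are assumptions made for the example, not figures from Spectra Logic. The article does not cover large data sets with low analytics needs, so sending them to a data center is also an assumption.

```python
def suggest_deployment(data_tb, analytics_demand, large_tb=100.0):
    """Map data set size and analytics demand to a deployment option.

    data_tb: size of the data set in terabytes.
    analytics_demand: "low" or "high".
    large_tb: hypothetical cutoff above which a data set counts as large.
    """
    if analytics_demand not in ("low", "high"):
        raise ValueError("analytics_demand must be 'low' or 'high'")
    if data_tb >= large_tb:
        # Large archives need the storage capacity of a data center.
        return "data center"
    if analytics_demand == "high":
        # High analytics with a small archive suits a clustered system.
        return "on-premise clustered system"
    # Small data sets with low analytics needs suit pay-as-you-go cloud.
    return "public cloud"


print(suggest_deployment(5, "low"))     # public cloud
print(suggest_deployment(5, "high"))    # on-premise clustered system
print(suggest_deployment(500, "high"))  # data center
```

In practice the cutoff depends on each organization’s cloud pricing, storage costs and need to share data, so it should be set from actual quotes rather than a fixed number.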

Making HPC Work

HPC has come a long way. With the right workflow, it can be a game changer for today’s design engineering teams. Getting work done faster translates to savings and provides an attractive return on your investment in HPC.

“Remember the old adage, time is money?” asks Scott E. Grabow, HPC system administrator for BAE Systems’ Intelligence and Security sector. “HPC can potentially give you more time to use the knowledge you develop than a competitor. In terms of a product development cycle, that could mean you get a shorter product design cycle. You can take advantage of that cycle to improve quality, performance or give you a longer period of time to generate and drive the media buzz to your product. Your decisions can allow you to gain a larger market share than your competition by allowing you to sustain the conversation with your product.”

About the Author

Jim Romeo is a freelance writer based in Chesapeake, VA. Send e-mail about this article to [email protected].