Fast App: Accelerating iSCSI SAN Storage

StarWind iSCSI SAN software, coupled with Mellanox Ethernet-based high speed infrastructure, accelerates storage solution networking connectivity.

Latest News

October 18, 2011

By DE Editors

Current data centers require frequent, high-speed access between server and storage infrastructures to respond to customers in a timely manner. Web-based service providers, such as those offering infrastructure as a service (IaaS) or platform as a service (PaaS), need to provide high-speed access to their customers while keeping infrastructure costs low. Where both cost efficiency and higher speed are required, 40GigE infrastructure combined with software-based iSCSI storage provides an ideal solution.

StarWind iSCSI SAN software running over a Mellanox ConnectX-2 40GigE networking solution provides better performance, high availability (HA) and redundant iSCSI storage at 40Gb/s bandwidth and higher IOPs. Installing StarWind iSCSI SAN takes only a few minutes, requires no reboot, and is entirely plug-and-play with no downtime.

A Solution Emerges

Under Mellanox Enterprise Datacenter’s initiative, StarWind used the Mellanox Zorro cluster (see: Mellanox.com/content/pages.php?pg=edc_cluster) to run StarWind iSCSI Initiator on HP DL380 systems with Mellanox ConnectX-2 40GigE NICs. The servers were connected as shown in the connectivity diagram (see Figure 1). The configuration used the maximum bandwidth allowed by PCIe Gen2, achieving a record 27Gb/s of throughput and 350K IOPs. The higher speed provides faster access to storage data in an iSCSI fabric.

Solution components include:

- 3 Zorro servers (HP DL380 G6 with 2 x 167GB disks, 24GB RAM, 8 cores)

- 6 single-port Mellanox 40Gb/s HCAs in PCIe Gen2 slots, 2 HCAs in each server. F/W: 2.7.9470. Ordering Part: MNQH19-XTR

- Connected as 3 subnets (no 40GigE switch) with copper QSFP cables

- OS: Windows Server 2008 R2

- OFED: driver version 2.1.3.7064

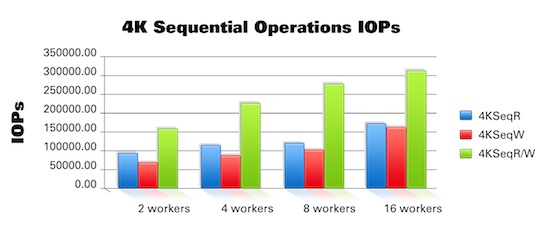

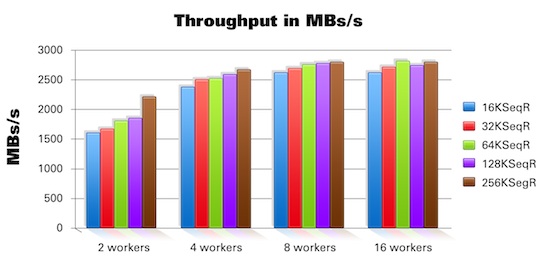

In the non-HA configuration (a single node, HA 1, and the client performing I/O in both directions over one full-duplex 40Gb/s Ethernet connection), the cluster carried 25Gb/s of iSCSI traffic. The full 40Gb/s wire speed was not reached because of PCIe Gen2 system limitations. With updated PCIe Gen3 hardware, the 40Gb/s wire speed can be achieved over iSCSI without any change to the networking hardware, the networking software or the StarWind iSCSI SAN software. With 16 clients, StarWind iSCSI SAN software achieved 300K IOPs at 25Gb/s. This represents a significant performance gain over current iSCSI HBA solutions.
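The PCIe limitation mentioned above can be made concrete with a rough calculation. The sketch below assumes the adapters sit in x8 slots (the article only says "PCIe Gen2") and uses the published per-lane signaling rates and line encodings for each generation. It ignores packet-level protocol overhead, which lowers the usable figure further, so the numbers are upper bounds rather than predictions of the measured results.

```python
# Rough PCIe data-rate estimate for one slot, one direction.
# Assumption: an x8 slot; the article does not state the lane count.

# generation -> (GT/s per lane, payload bits, total bits per encoded symbol group)
PCIE_GENERATIONS = {
    "Gen2": (5.0, 8, 10),     # 5 GT/s with 8b/10b encoding
    "Gen3": (8.0, 128, 130),  # 8 GT/s with 128b/130b encoding
}

def pcie_data_rate_gbps(generation: str, lanes: int = 8) -> float:
    """Return the post-encoding data rate in Gb/s (before packet overhead)."""
    gt_per_s, payload_bits, total_bits = PCIE_GENERATIONS[generation]
    return gt_per_s * lanes * payload_bits / total_bits

for gen in ("Gen2", "Gen3"):
    rate = pcie_data_rate_gbps(gen)
    verdict = "can" if rate >= 40 else "cannot"
    print(f"PCIe {gen} x8: {rate:.1f} Gb/s, {verdict} carry a 40Gb/s line rate")
```

Under these assumptions a Gen2 x8 slot tops out at 32 Gb/s before packet overhead, which is consistent with measured iSCSI throughput in the mid-20s of Gb/s, while a Gen3 x8 slot leaves headroom above 40 Gb/s.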

The full HA configuration (with both the HA 1 and HA 2 nodes processing requests under a Round-Robin policy) achieves the same results as the non-HA configuration, but only with more workers and a deeper I/O queue, which is a shortcoming of the Microsoft software initiator. Every write went over the wire twice: first from the client to HA 1 (or HA 2), and then from that node to its partner HA node, before it was acknowledged as “OK” to the client. The full HA configuration can reach non-HA performance under heavy, non-pulsating I/O traffic. A full set of graphs for both IOPs and Gb/s is published below. System RAM was used as the destination I/O target, which mirrors the rising trend of SSD usage in storage servers. A traditional storage stack (another PCIe controller, a set of hard disks, etc.) would only add latency to the whole system and limit performance.
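The HA write path described above can be illustrated with a toy model. This is a minimal sketch, not StarWind's implementation: the `HANode` class, the `run_client` function and the in-memory dictionaries standing in for RAM-backed storage are all hypothetical names introduced for illustration. It shows only the two properties the article states: requests alternate between nodes in round-robin order, and each write is mirrored to the partner node before it is acknowledged.

```python
from itertools import cycle

class HANode:
    """Toy storage node that keeps blocks in RAM (a dict). Hypothetical."""
    def __init__(self, name):
        self.name = name
        self.blocks = {}
        self.partner = None

    def write(self, lba, data, wire_log):
        # Store locally, then mirror synchronously to the partner
        # before the write is acknowledged to the client.
        self.blocks[lba] = data
        wire_log.append((self.name, self.partner.name))  # second wire trip
        self.partner.blocks[lba] = data
        return "OK"

def run_client(writes, nodes):
    """Send writes to the nodes in round-robin order; return the wire log."""
    wire_log = []
    targets = cycle(nodes)
    for lba, data in writes:
        node = next(targets)
        wire_log.append(("client", node.name))  # first wire trip
        ack = node.write(lba, data, wire_log)
        assert ack == "OK"
    return wire_log

ha1, ha2 = HANode("HA 1"), HANode("HA 2")
ha1.partner, ha2.partner = ha2, ha1

writes = [(lba, b"x" * 4096) for lba in range(4)]
log = run_client(writes, [ha1, ha2])
print(len(log), "wire transfers for", len(writes), "writes")
print("mirrors identical:", ha1.blocks == ha2.blocks)
```

In this model four writes produce eight wire transfers, which is why an HA pair needs more outstanding I/O than a single node to reach the same client-visible throughput.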

Accelerated Cost-effective Storage Solutions

Consolidating onto a single 40Gb/s networking link for iSCSI in a virtual environment is recommended for blade server setups, as it replaces four individual 10GigE links. Trunking four 10Gb/s links to reach 40Gb/s does not work well for iSCSI, because 4KB iSCSI PDUs travel through only two of the four cards in the trunk, leaving half of the theoretical bandwidth unused. Trunking also increases latency, and four combined 10Gb/s cards cost more than a single 40Gb/s card, which makes consolidated 40Gb/s the more future-proof option.
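The article does not say why the trunk leaves links idle. One common cause, offered here only as an assumption, is that link aggregation typically keeps every packet of a given connection on one physical link, chosen by hashing the connection's addresses or ports. The sketch below uses a deliberately simplified, hypothetical hash policy (`pick_link`) and made-up initiator source ports; it is not the algorithm of any particular driver or switch.

```python
def pick_link(src_port: int, dst_port: int, n_links: int) -> int:
    """Illustrative per-flow hash (hypothetical policy): all packets of
    one TCP connection map to the same physical link."""
    return (src_port ^ dst_port) % n_links

ISCSI_PORT = 3260   # well-known iSCSI target port
N_LINKS = 4         # four 10Gb/s links in the trunk
LINK_GBPS = 10

# Hypothetical source ports for two iSCSI TCP connections.
session_src_ports = [49152, 49153]

used_links = {pick_link(p, ISCSI_PORT, N_LINKS) for p in session_src_ports}
usable = len(used_links) * LINK_GBPS
print(f"links in use: {sorted(used_links)} -> "
      f"at most {usable} of {N_LINKS * LINK_GBPS} Gb/s")
```

With per-flow hashing the number of busy links can never exceed the number of connections, and no single connection can exceed one link's 10Gb/s. A single 40Gb/s port has neither restriction.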

Higher ROI has been demonstrated:

- Fewer PCIe slots have been used to achieve the same bandwidth (one instead of four).

- Fewer cables between cluster nodes.

- Lower power consumption (a single powered chip and one copper cable instead of four).

- Easier to install and manage.

- Superior scalability: higher bandwidth can be achieved using the same number of slots.

Mellanox says that StarWind iSCSI SAN software version 5.6 with Mellanox ConnectX-2 EN adapters running at 10Gb/s or higher (preferably 40Gb/s), on current and future x86 servers with PCIe Gen2 or PCIe Gen3, is a cost- and power-efficient software-based end-to-end iSCSI solution for virtualized and non-virtualized environments.

INFO

Mellanox

About the Author

DE’s editors contribute news and new product announcements to Digital Engineering.

Press releases may be sent to them via [email protected].