A Wiser Icarus: Preventing a Repeat of Disaster

Absoft and Sandia lab tools help diagnose the cause of the Columbia shuttle disaster.

Latest News

June 1, 2005

By Jeff Livesay

As the Space Shuttle Columbia flew through California’s clear blue skies on its return from a successful mission on February 1, 2003, the four left-side temperature gauges suddenly failed. A minute later, rapid heat build-up caused buffeting to the left side of the hull. Four minutes after that, over New Mexico, Mission Control determined the buffeting was causing drag on the shuttle’s left side.

|

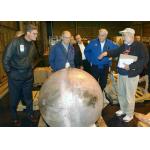

| Members of the Columbia Accident Investigation Board examine pieces of Columbia debris in the RLV Hangar. Click image to enlarge. |

Minutes later, the orbiter broke apart over Texas. The tragedy killed all seven astronauts aboard and left debris spread over hundreds of square miles. The question became: how do we determine the cause of this horrible accident so we can prevent it from ever happening again?

The solution to the mystery would require sophisticated analysis using software that emerged from an unlikely combination of partners. Fortran, the oldest high-level programming language still in widespread use, became the engine to drive Icarus, a cutting-edge Direct Simulation Monte Carlo (DSMC) program originally developed at Sandia National Labs in Albuquerque, NM, for building missile defense systems. There it is used to simulate the localized heating that takes place on a real warhead and on a decoy, allowing sensors to distinguish between the two. Over the past decade the code has also found many practical applications on the earth’s surface, including microelectronics manufacturing in near-vacuum conditions and the operation of microelectromechanical systems (MEMS).

Engineers developed Fortran in the 1950s at IBM. A contraction of FORmula TRANslator, it eschews many modern software paradigms in favor of speed and simplicity. Today, Fortran remains without peer in raw number-crunching capability and is commonly used to develop computation-intensive programs in fields ranging from cancer and human genome research to commodity trading. It’s that ability to rapidly crunch massive quantities of numbers that made it right for Icarus.

As a maker of Fortran compilers, Absoft Corporation was a logical choice for development tools. Timothy Bartel at Sandia National Laboratories originally selected the Absoft Fortran compiler and Fx2 debugging tools because, combined, they are the most complete solution for both Fortran, which makes up the vast majority of the Icarus code, and C, which is used in a few minor files. It’s a solid, versatile development tool kit, a key to productivity for an engineering or scientific code developer.

This screen image shows Icarus being developed with Absoft tools.

The Absoft tools combine a powerful debugging environment, an editor, graphical interfaces for multiple compilers, and third-party tools that operate as plug-ins. To quickly identify problems, the debugger lets you define breakpoints and watchpoints, step interactively through the code, and watch variables change. Icarus uses a random number generator to decide which code path each simulated particle takes, so certain operations might occur only once in 100 or once in 1,000 cycles. By setting breakpoints and watchpoints, Bartel can run the code at full speed until it hits the path he is interested in, then step through that section one line at a time until he finds the problem. The Absoft Linux Fortran compiler delivers real-world application performance in parallel environments.
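The idea of running at full speed until a rare, randomly selected path is reached can be sketched outside any particular debugger. The Python sketch below is only an illustration and is not Icarus or Absoft code: the function name `run_cycles`, the 1-in-1,000 probability, and the seed are all assumptions. It fixes the random seed so that the rare branch occurs at the same cycles on every run, and it calls a hook whenever that branch is taken, which is the point where a breakpoint would stop execution.

```python
import random


def run_cycles(n_cycles, rare_probability, seed, on_rare_path):
    """Run n_cycles iterations and call on_rare_path(cycle) on the rare branch.

    Returns the list of cycle indices that took the rare branch.
    """
    rng = random.Random(seed)  # a fixed seed makes the rare event reproducible
    rare_cycles = []
    for cycle in range(n_cycles):
        if rng.random() < rare_probability:
            # Rare code path: this is where a breakpoint would be placed.
            rare_cycles.append(cycle)
            on_rare_path(cycle)
        # The common code path would run here without interruption.
    return rare_cycles


# Hypothetical usage: record every cycle on which the hook fired.
hook_calls = []
hits = run_cycles(10_000, 1.0 / 1000.0, seed=42, on_rare_path=hook_calls.append)
```

Because the generator is seeded, a second run with the same arguments visits the rare path on exactly the same cycles, so a problem seen once can be reached again and stepped through.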

Bartel developed Icarus, which uses advanced Direct Simulation Monte Carlo (DSMC) techniques, to accurately simulate gas flows at or near the molecular level in the low-density conditions that exist at very high altitudes. The program would turn out to be one of the keys necessary to unravel the mystery surrounding the conditions during Columbia’s reentry.

Fortran and Icarus Unravel the Columbia Disaster

While Icarus is used every day by scientists and engineers around the world, its most newsworthy application came when Sandia and Lockheed Martin, which manages the laboratories, offered Sandia’s technical support to NASA to determine the cause of the Columbia disaster.

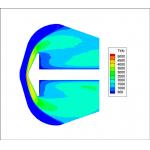

This is a screen image of Icarus analyzing the space shuttle, specifically translational temperature comparisons. The plot compares the results using two different chemistry models. The issue under investigation is how to allocate the internal energy of the reactants to the products.

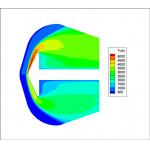

This is a screen image of Icarus analyzing the space shuttle, specifically vibrational temperature comparisons. The plot compares the results using two different chemistry models. The issue under study is how to allocate the internal energy of the reactants to the products.

Icarus was used to validate the hypothesis that the disaster was caused by a hole in the leading edge of one of the wings that had been created during takeoff. The hole didn’t cause a problem in space because the atmosphere was too thin to produce substantial heating. As the shuttle re-entered the atmosphere, however, the simulations showed that the air rushing into the hole heated up the aluminum cavity of the wing and eventually caused the wing to fail. Originally, most of the people involved in the project expected a different result, thinking that appreciable heating could not have occurred above 250,000 feet because of the low air density.

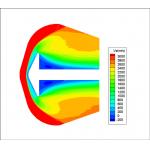

This is a screen image of Icarus analyzing the space shuttle, specifically axial velocity comparisons. The plot compares the results using two different chemistry models. The issue under study is how to allocate the internal energy of the reactants to the products.

Sandia researchers’ analyses and experimental studies supported the position that foam debris shed from the fuel tank, which struck the orbiter wing during launch, was the most probable cause of the wing damage that led to the accident. As a result, the space agency has added many more high-resolution cameras to watch parts of the shuttle during launch and redesigned the way the tank attaches to the orbiter. It has also built a new robot boom that lets astronauts use a camera to inspect all exterior surfaces from inside the shuttle, and it has decided to develop the ability to repair heat-protection tiles in orbit. Finally, a refurbished rudder speed brake flap in the tail will help slow orbiters during landings. In this way, specialized engineering and scientific codes such as Icarus solve complex problems of the 21st century.

Jeff Livesay is the Chief Operating Officer of Absoft Corporation in Rochester Hills, Michigan. He has 27 years of experience in the energy, software, telecommunications, and defense industries.

How the DSMC Method Works

The basic premise of direct simulation Monte Carlo (DSMC) is that the motion of simulated particles can be decoupled from their collisions over a time step. Icarus simulates a flow field using a number of computational particles that represent all types of species, such as radicals, ions, and molecules. The species type, spatial coordinates, velocity components, internal energy partitioning, and weight factor of each computational particle are stored. As the particles move through the domain, they collide with one another and with surfaces. New particles may be added at specified inlet port locations, and particles may be removed from the simulation due to chemical reactions or through ports.
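The decoupling of motion from collisions can be illustrated with a toy time step. The Python sketch below is not Icarus code and makes several loudly simplifying assumptions: a one-dimensional domain with reflecting walls, a single species with equal masses and weights, no inlets, outlets, or chemistry, and a fixed per-pair collision probability standing in for the physically based collision-rate selection a production DSMC code would use. All names (`Particle`, `move`, `collide`, `step`) and numbers are hypothetical.

```python
import math
import random
from dataclasses import dataclass


@dataclass
class Particle:
    species: str       # placeholder species label
    x: float           # position along a 1-D domain, m
    v: list            # velocity components [vx, vy, vz], m/s
    e_internal: float  # internal energy, J (stored but unused in this toy)
    weight: float      # number of real molecules represented


def move(particles, dt, length):
    """Motion phase: free flight with specular reflection at x=0 and x=length."""
    for p in particles:
        p.x += p.v[0] * dt
        while p.x < 0.0 or p.x > length:
            p.x = -p.x if p.x < 0.0 else 2.0 * length - p.x
            p.v[0] = -p.v[0]


def collide(particles, n_cells, length, collision_probability, rng):
    """Collision phase: pair particles within each cell, scatter isotropically."""
    cells = [[] for _ in range(n_cells)]
    for p in particles:
        index = min(int(p.x / length * n_cells), n_cells - 1)
        cells[index].append(p)
    for members in cells:
        rng.shuffle(members)
        for a, b in zip(members[0::2], members[1::2]):
            if rng.random() >= collision_probability:
                continue
            # Equal-mass elastic collision: keep the centre-of-mass velocity
            # and the relative speed, choose a random new relative direction.
            v_cm = [(va + vb) / 2.0 for va, vb in zip(a.v, b.v)]
            g = math.sqrt(sum((va - vb) ** 2 for va, vb in zip(a.v, b.v)))
            cos_t = 2.0 * rng.random() - 1.0
            sin_t = math.sqrt(1.0 - cos_t * cos_t)
            phi = 2.0 * math.pi * rng.random()
            g_new = [g * cos_t, g * sin_t * math.cos(phi), g * sin_t * math.sin(phi)]
            a.v = [c + 0.5 * gn for c, gn in zip(v_cm, g_new)]
            b.v = [c - 0.5 * gn for c, gn in zip(v_cm, g_new)]


def step(particles, dt, length, n_cells, collision_probability, rng):
    """One decoupled time step: move every particle, then collide within cells."""
    move(particles, dt, length)
    collide(particles, n_cells, length, collision_probability, rng)


# Hypothetical usage: 200 particles in a 1 m domain split into 10 cells.
rng = random.Random(0)
particles = [
    Particle("N2", rng.uniform(0.0, 1.0),
             [rng.gauss(0.0, 300.0) for _ in range(3)], 0.0, 1.0e12)
    for _ in range(200)
]
for _ in range(50):
    step(particles, 1.0e-4, 1.0, 10, 0.5, rng)
```

Because collisions only happen between particles that share a cell after the move, the two phases never need to be solved together, which is the decoupling the method relies on.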

Since this is a statistical method in which the system evolves over time, a steady-state solution is an average of a number of solution time steps, or snapshots of the system, after the flow field has reached a steady state. The size of the time step is selected to be a small fraction of the mean collision time, or a fraction of the transit time of a molecule through a cell. During the motion phase, particles move in free molecular motion according to their starting velocity and any body forces acting on the particles, such as the Lorentz force on charged species. Icarus can also be run in a time accurate mode to model unsteady problems. —JL
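The two rules described above, choosing the time step and averaging snapshots once the flow has settled, can be written down in a few lines. This Python sketch is an illustration under stated assumptions rather than the Icarus implementation: the function names, the default fraction of 0.2, and the choice to take the smaller of the two time scales are all hypothetical.

```python
def choose_time_step(mean_collision_time, cell_size, typical_speed, fraction=0.2):
    """Return a time step that is a fraction of the more restrictive time scale.

    mean_collision_time: average time between collisions for a molecule, s
    cell_size:           length of a computational cell, m
    typical_speed:       representative molecular speed, m/s
    fraction:            assumed safety factor (hypothetical default)
    """
    transit_time = cell_size / typical_speed  # time for a molecule to cross a cell
    return fraction * min(mean_collision_time, transit_time)


def steady_state_average(snapshots, n_transient):
    """Average per-cell samples over the snapshots taken after the transient.

    snapshots:   list of snapshots, each a list with one value per cell
    n_transient: number of leading snapshots to discard
    """
    kept = snapshots[n_transient:]
    if not kept:
        raise ValueError("no snapshots left after discarding the transient")
    n_cells = len(kept[0])
    return [sum(snapshot[i] for snapshot in kept) / len(kept) for i in range(n_cells)]


# Hypothetical usage with made-up numbers.
dt = choose_time_step(mean_collision_time=1.0e-6, cell_size=1.0e-3, typical_speed=500.0)
averaged = steady_state_average([[10.0, 10.0], [1.0, 3.0], [3.0, 5.0]], n_transient=1)
```

Averaging more post-transient snapshots reduces the statistical scatter in each cell, at the cost of a longer run.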

DSMC Is Similar to CFD

While CFD (computational fluid dynamics) software, typically written in Fortran, is generally used to solve engineering and scientific fluid dynamics problems, it wasn’t the proper choice for investigating the initial phases of the Columbia disaster: typical CFD codes are valid for a range of gas densities that did not include the conditions Columbia encountered. The Navier-Stokes equations upon which traditional CFD is based require sufficient gas density for their assumptions to hold. As density decreases, such as at altitudes above 250,000 feet, the DSMC method, which operates at or near the molecular level, becomes more appropriate. Though it might seem that DSMC is valid for a broader range of problems than CFD, it isn’t more widely used because simulating the molecular interactions of representative particles is far more computation-intensive than solving the Navier-Stokes equations. Even though each computational particle represents a large number of molecules, real-world problems frequently require the simulation of more than a billion particles, while CFD simulations with more than a million cells are just now becoming commonplace. As a result, DSMC was rarely used until the early 1990s because it took months to reach a solution.

Icarus was originally developed for use in developing missile defense systems, where it simulates the localized heating that takes place on a real warhead and on a decoy, allowing sensors to distinguish between the two. The code has also found many practical applications on the earth’s surface, including microelectronics manufacturing in near-vacuum conditions and the operation of microelectromechanical systems (MEMS). In MEMS, air densities are normal, but Navier-Stokes fails because the size of the devices approaches the collisional distance of gas molecules.
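A common way to express when the continuum assumptions break down is the Knudsen number, the ratio of the collisional distance (the mean free path) to a characteristic length of the device or flow feature. The Python sketch below uses the standard hard-sphere estimate of the mean free path. It is not taken from Icarus: the effective molecular diameter for air, the regime thresholds (commonly quoted rough values), and the example lengths are assumptions chosen for illustration.

```python
import math

BOLTZMANN = 1.380649e-23  # J/K
AIR_DIAMETER = 3.7e-10    # m, assumed effective hard-sphere diameter for air


def mean_free_path(temperature, pressure, diameter=AIR_DIAMETER):
    """Hard-sphere mean free path in metres for a gas at the given T (K) and p (Pa)."""
    return BOLTZMANN * temperature / (math.sqrt(2.0) * math.pi * diameter ** 2 * pressure)


def knudsen_number(temperature, pressure, length):
    """Ratio of mean free path to a characteristic length (dimensionless)."""
    return mean_free_path(temperature, pressure) / length


def flow_regime(kn):
    """Classify a Knudsen number using commonly quoted, approximate thresholds."""
    if kn < 0.01:
        return "continuum"       # Navier-Stokes assumptions hold
    if kn < 0.1:
        return "slip"            # continuum equations need wall corrections
    if kn < 10.0:
        return "transitional"    # particle methods such as DSMC are appropriate
    return "free molecular"


# Hypothetical examples in air at roughly sea-level conditions (288 K, 101325 Pa).
sea_level_mfp = mean_free_path(288.0, 101325.0)
large_body = flow_regime(knudsen_number(288.0, 101325.0, 1.0))       # 1 m object
tiny_gap = flow_regime(knudsen_number(288.0, 101325.0, 1.0e-7))      # 0.1 micron gap
```

With these assumptions the sea-level mean free path comes out at tens of nanometers, so a meter-scale object sits firmly in the continuum regime while a sub-micron gap does not. The same ratio grows at high altitude because the mean free path is inversely proportional to pressure.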

Icarus first came into its own when the code was ported to the 1024 processor nCUBE machines over a decade ago. For the first time, it became possible to get the answers to problems in days rather than months. The Icarus Fortran code is now being run on Red Storm, one of the world’s five fastest supercomputers, which was recently assembled at Sandia using mostly off-the-shelf parts. The $90 million, 41.5 teraflops (trillion operations per second) machine was developed by Sandia and Cray Inc.—JL

Images from the Columbia

The first image in this article and the following images are courtesy of NASA. You can access additional NASA imagery as well as audio and videos of the Columbia Space Shuttle, its crew, flight, and crash investigation by clicking here. Go to the links marked STS-107 (01/2003).—DE

Left: View of debris from the Space Shuttle Columbia in the hangar at Barksdale Air Force Base, Shreveport, Louisiana. The debris was collected and cataloged prior to shipment to the Kennedy Space Center, Florida. Right: NASA Administrator Sean O’Keefe (second from right, blue jacket) is pictured in a hangar at Barksdale Air Force Base, where debris from the Space Shuttle Columbia is collected and cataloged by members of the Mishap Investigation Team.

Left: Technicians at the Johnson Space Center in Houston team up to assemble a test article to simulate the inboard leading edge of a Space Shuttle wing as part of the Columbia Accident Investigation Board’s testing. Right: A single thermal protection system tile from the Space Shuttle Columbia that was recovered near Powell, Texas, as part of the debris recovery effort.

Contact Information

Absoft Corp.

Rochester Hills, MI

Sandia National Labs

Albuquerque, NM
