

July 27, 2017

Vizible - VR for Sales Professionals from WorldViz on Vimeo.

Next week, the sun-drenched City of Angels will host SIGGRAPH, the annual conference on computer graphics wizardry. The organizers of SIGGRAPH 2017 (Los Angeles, California, July 30 - Aug 3) promise “five days of research results, demos, educational sessions, art, screenings, and hands-on interactivity.” The last item, the hands-on interactivity, is bound to include some jaw-dropping AR-VR (augmented reality, virtual reality) setups.

The technology born in the entertainment sector, as a way to let gamers explore prehistoric landscapes teeming with dinosaurs or drive a tank and wreak harmless havoc in pixels, is growing up. It’s now mature enough to claim its rightful place in the professional market. Many AR-VR vendors are pivoting their offerings toward the manufacturing, engineering, and design industries. Some are working hard to convince you that teleporting yourself into a virtual conference room with your boss, colleagues, or client is as easy as speaking to them via WebEx or GoToMeeting.

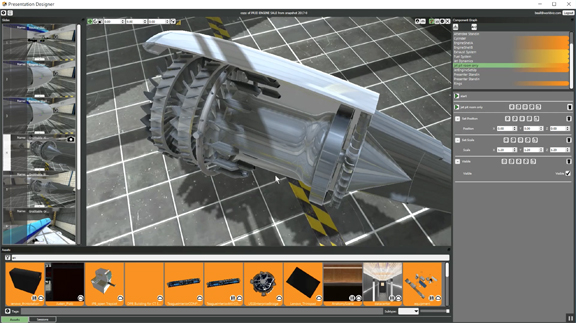

WorldViz promises an easy way to set up VR presentations with its Presentation Designer software (image courtesy of WorldViz).

VR for Sales and Design

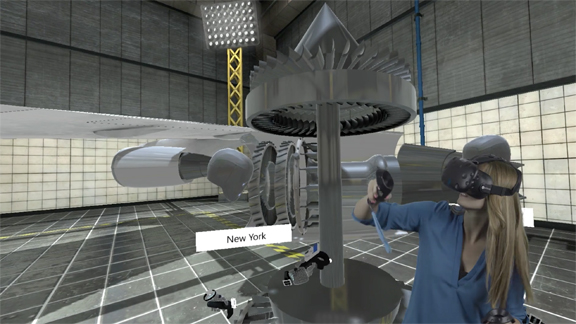

WorldViz, which has been in the VR market since 2002, is getting ready to unveil its product, formerly known as Project Skofield and now branded Vizible. The company is explicitly marketing it as a “VR solution for sales,” but points out in its press release that “[Vizible] can also be used for remote collaboration, design review with distributed teams, and product training.”

WebEx, GoToMeeting, and other web-based conferencing tools became the norm because of their ease of use and low barrier to entry. They are free for most participants, and you need no expertise beyond the ability to launch and use a web browser. At present, even the easiest AR-VR applications require a good understanding of 3D formats and some hardware-configuration skills to deploy. This remains a significant hurdle to widespread AR-VR adoption.

“Little to no technical expertise is necessary to create and experience VR content using Vizible,” said WorldViz. “The solution’s Presentation Designer software allows sales professionals to quickly put together a VR presentation by dragging and dropping elements into the WYSIWYG editor. The Presentation Designer also allows the presenter to bring the presentation to life by adding animations, triggers, and user interaction. In addition to 3D content, traditional 2D content such as PDF training manuals or PowerPoint slides can be inserted to support the overall meeting.”

If a globally dispersed team chooses to work in AR-VR, the assets (the 3D files of the products, structures, and environments the team wants to analyze together) must be hosted on a server accessible to all team members. Vizible, for example, lets you upload shared assets to the cloud for collaboration sessions. In industries such as automotive, aerospace, and heavy machinery, the size of the assets and their IP sensitivity could become obstacles to deploying AR-VR via the public cloud.

Can VR client meetings become the next WebEx or GoToMeeting? WorldViz seems to be betting on it (image courtesy of WorldViz).

Made for Machine Learning and Large Data Sets

NVIDIA, whose GPUs drive much of the visualization market, is also heading to SIGGRAPH. In a blog post published before the show, the company wrote, “Predicting what the finished design of a product will look like is a challenge manufacturers, industrial designers and creative pros face every day ... Bombardier—one of the world’s top aerospace and transportation companies—provides a great example of how to do this right, by incorporating VR into its design process.”

In recent years, NVIDIA has also been advocating the use of GPUs in compute-intensive AI development projects. With far more processing cores than a typical CPU, the GPU can be an effective coprocessor for digesting the large amounts of sample data used in deep learning and machine learning, the company has often pointed out.

“Dublin-based startup Artomatix is a leading example of this trend. Its AI-based approach to texture generation and style transfer helps automate many of the mundane, tedious and repetitive tasks artists and designers face,” wrote NVIDIA. “Artomatix is set to demonstrate its Aging of 3D Worlds platform in NVIDIA’s booth. Artomatix’s deep learning technology runs on NVIDIA CUDA and NVIDIA GPUs.”

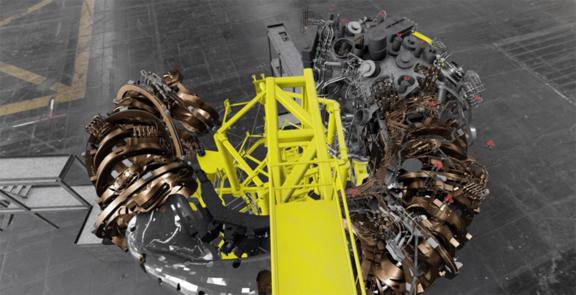

Long-time NVIDIA partner Dassault Systemes is also setting up shop inside the NVIDIA booth. The company plans to show “a proof of concept of how, while running dual Quadro P6000 [GPUs], engineers can natively edit massive geometry. In this case, a design for an experimental Stellarator fusion reactor, the largest in the world, developed by the Max Planck Institute in Dassault’s CATIA computer-aided design platform.”

The design for an experimental Stellarator fusion reactor, modeled in Dassault Systemes CATIA, in GPU-powered rendering, with the option to make real-time edits (image courtesy of NVIDIA).

Installed VR-AR Experiences to Try

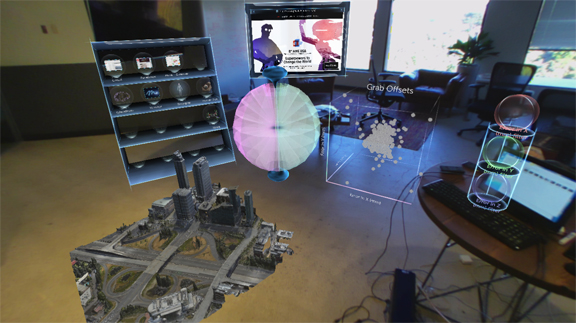

Last year, AR developer Meta’s demonstration was one of SIGGRAPH’s highlights. The company is now preparing to launch a new version of its AR developer kit, dubbed Meta 2. This year, Meta 2 will be part of the Emerging Technologies Installations at SIGGRAPH 2017. You can use Meta 2 to “Touch Holograms in Midair,” as the name of one installation suggests. “The current solutions from Microsoft, Daqri, or Meta enable users to place virtual elements in a static location beside real objects,” according to the organizers. “This demonstration adds a method for touching the objects, based on a touch development kit from Ultrahaptics, the only mid-air tactile feedback technology.”

Most AR-VR setups let you interact with digital objects via joysticks, mice, and special wands. As a result, the way you interact with the elements inside a digital vehicle, for example, is vastly different from how you would interact with the steering wheel, gear shift, and dashboard in real life. Integrating tactile sensation adds another layer of realism to the experience. It can be crucial in virtual training applications, where developing muscle memory and making subtle adjustments based on physical feedback are important.

DE editors will be attending SIGGRAPH 2017. For live news and tweets from the show, follow @DEeditor on Twitter or join DE’s Facebook community.

Can you touch and interact with holographic models? You can find out in the Emerging Technologies Installations at SIGGRAPH 2017. Shown here is the view from inside the Meta AR headset, featuring holograms (image courtesy of Meta).




About the Author

Kenneth Wong is Digital Engineering’s resident blogger and senior editor. Email him at [email protected] or share your thoughts on this article at digitaleng.news/facebook.
